Quick Start for Interactive Live Streaming in Android

VideoSDK lets you integrate interactive live streaming into your Android application in minutes. Although the SDK was built for meetings, it adapts easily to live streaming, with real-time support for up to 100 hosts/co-hosts and 2,000 viewers, which makes it a good fit for social use cases. This guide walks you through integrating live streaming into your app.

For standard live streaming with 6-7 second latency and playback support, follow this documentation.

Prerequisites

Before proceeding, ensure that your development environment meets the following requirements:

- Android Studio Arctic Fox (2020.3.1) or later.

- Android SDK API Level 21 or higher.

- A mobile device that runs Android 5.0 or later.

Getting Started with the Code!

Follow the steps below to set up the environment needed to add interactive live streaming to your app. You can also find the quickstart code sample here.

Important Changes in Android SDK Version v0.2.0

- The following modes have been deprecated:

  - CONFERENCE has been replaced by SEND_AND_RECV

  - VIEWER has been replaced by SIGNALLING_ONLY

Please update your implementation to use the new modes.
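If mode names are stored in your app or hard-coded in several places, the renames above can be applied in one spot. The following is a minimal sketch: `migrateMode` is a hypothetical helper written for this guide, not part of the VideoSDK API, and it only encodes the two renames listed above.

```kotlin
// Hypothetical helper, not part of the VideoSDK API: maps a pre-v0.2.0 mode
// name to its replacement so stored or hard-coded values can be upgraded.
fun migrateMode(mode: String): String = when (mode) {
    "CONFERENCE" -> "SEND_AND_RECV"
    "VIEWER" -> "SIGNALLING_ONLY"
    else -> mode // already a current mode name, or unknown: leave untouched
}
```

Calling `migrateMode("CONFERENCE")` yields `"SEND_AND_RECV"`, while a current name such as `"RECV_ONLY"` passes through unchanged.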

⚠️ Compatibility Notice:

To ensure a seamless meeting experience, all participants must use the same SDK version.

Do not mix version v0.2.0+ with older versions, as doing so may cause significant conflicts.

Create new Android Project

For a new project in Android Studio, create a Phone and Tablet Android project with an Empty Activity.

After you create the project, Android Studio automatically starts a Gradle sync. Ensure that the sync succeeds before you continue.

Integrate Video SDK

Add the repositories to your project's settings.gradle file, using one of the two configurations below.

- Maven Central

dependencyResolutionManagement {
    repositories {
        google()
        mavenCentral()
        maven { url = uri("https://maven.aliyun.com/repository/jcenter") }
    }
}

- JitPack

dependencyResolutionManagement {
    repositories {
        google()
        maven { url = uri("https://www.jitpack.io") }
        mavenCentral()
        maven { url = uri("https://maven.aliyun.com/repository/jcenter") }
    }
}

- Add the following dependency to your app's app/build.gradle file.

dependencies {

implementation ("live.videosdk:rtc-android-sdk:0.3.0")

// library to perform Network call to generate a stream id

implementation ("com.amitshekhar.android:android-networking:1.0.2")

// other app dependencies

}

The Android SDK is compatible with the armeabi-v7a, arm64-v8a, and x86_64 architectures. To run the application in an emulator, choose the x86_64 ABI when creating the device.

Add permissions into your project

- In /app/Manifests/AndroidManifest.xml, add the following permissions.

<uses-feature android:name="android.hardware.camera" android:required="false" />

<uses-permission android:name="android.permission.INTERNET"/>

<uses-permission android:name="android.permission.CAMERA"/>

<uses-permission android:name="android.permission.RECORD_AUDIO"/>

<uses-permission android:name="android.permission.MODIFY_AUDIO_SETTINGS"/>

If your project sets android.useAndroidX=true, also set android.enableJetifier=true in the gradle.properties file to migrate your project to AndroidX and avoid duplicate class conflicts.
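As a sketch, the two flags named in the note above would sit together in gradle.properties like this. Both property names come from the note itself; any other properties your project already defines stay as they are.

```properties
# gradle.properties
android.useAndroidX=true
android.enableJetifier=true
```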

Structure of the project

Your project structure should look like this.

app/

├── src/main/

│ ├── java/com/example/app/

│ │ ├── components/

│ │ ├── screens/

│ │ ├── ui/

│ │ │ └── theme/

│ │ ├── model/

│ │ ├── MainApplication.kt

│ │ ├── MainActivity.kt

│ │ ├── NetworkClass.kt

│ │ └── StreamingMode.kt

│ ├── res/

│ └── AndroidManifest.xml

├── build.gradle

└── settings.gradle

App Architecture

Step 1: Initialize VideoSDK

- Create a MainApplication class that extends android.app.Application.

- Create a field sampleToken in MainApplication to hold the token generated from the VideoSDK dashboard. This token is used for the VideoSDK config as well as for generating the stream ID.

import android.app.Application

import live.videosdk.rtc.android.VideoSDK

class MainApplication: Application() {

val sampleToken = "YOUR_TOKEN" //paste your token here

override fun onCreate() {

super.onCreate()

VideoSDK.initialize(applicationContext)

}

}

- Add MainApplication to AndroidManifest.xml.

<application
    android:name=".MainApplication">

    <!-- ... -->

</application>

Step 2: Managing Permissions and Setting Up Navigation

The MainActivity handles permission requests for the camera and microphone and sets up navigation using Jetpack Compose.

Upon creation, it checks for the required permissions (RECORD_AUDIO and CAMERA) and requests any that have not been granted. It then sets the MyApp composable as the content, which contains the navigation logic through the NavigationGraph.

The NavigationGraph defines two screens:

- JoinScreen: For joining or creating a stream.

- StreamingScreen: For participating in a stream, with streamId and mode passed as arguments.

class MainActivity : ComponentActivity() {

override fun onCreate(savedInstanceState: Bundle?) {

super.onCreate(savedInstanceState)

checkSelfPermission(REQUESTED_PERMISSIONS[0], PERMISSION_REQ_ID)

checkSelfPermission(REQUESTED_PERMISSIONS[1], PERMISSION_REQ_ID)

setContent {

Videosdk_android_modes_quickstartTheme {

MyApp(this) } }

}

private fun checkSelfPermission(permission: String, requestCode: Int): Boolean {

if (ContextCompat.checkSelfPermission(this, permission) != PackageManager.PERMISSION_GRANTED) {

ActivityCompat.requestPermissions(this, REQUESTED_PERMISSIONS, requestCode)

return false

}

return true

}

companion object {

private const val PERMISSION_REQ_ID = 22

private val REQUESTED_PERMISSIONS = arrayOf(Manifest.permission.RECORD_AUDIO, Manifest.permission.CAMERA)

}

}

@Composable

fun MyApp(context: Context) {

NavigationGraph(context = context)

}

@Composable

fun NavigationGraph(navController: NavHostController = rememberNavController(), context: Context) {

NavHost(navController = navController, startDestination = "join_screen") {

composable("join_screen") { JoinScreen(navController, context) }

composable("stream_screen?streamId={streamId}&mode={mode}") { backStackEntry ->

val streamId = backStackEntry.arguments?.getString("streamId")

val modeStr = backStackEntry.arguments?.getString("mode")

val mode = StreamingMode.valueOf(modeStr ?: StreamingMode.SendAndReceive.name)

streamId?.let {

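// Remember the view model so that recomposition does not create a fresh instance
val streamViewModel = remember { StreamViewModel() }

StreamingScreen(streamViewModel, navController, streamId, mode, context)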

}

}

}

}

Step 3: Creating Reusable Components

- Before building the individual screens, it's essential to develop reusable composable components that will be used throughout the project.

- Create a ReusableComponents.kt file to organize all reusable components for the project, such as buttons, spacers, and customizable text.

@Composable

fun MyAppButton(task: () -> Unit, buttonName: String) {

Button(onClick = task) {

Text(text = buttonName) }

}

@Composable

fun MySpacer() {

Spacer(modifier = Modifier.fillMaxWidth()

.height(1.dp)

.background(color = Color.Gray)) }

@Composable

fun MyText(text: String, fontSize: TextUnit = 23.sp) {

Text(text = text,

fontSize = fontSize,

fontWeight = FontWeight.Normal,

modifier = Modifier.padding(4.dp),

style = MaterialTheme.typography.bodyMedium.copy(fontSize = 16.sp),

color = MaterialTheme.colorScheme.onSurface.copy(alpha = 0.7f))

}

- Create a ParticipantVideoView.kt file that includes two composable functions: ParticipantVideoView for rendering individual participant video streams, and ParticipantsGrid for displaying a grid of participant videos.

- Here the participant's video is displayed using VideoView. To learn more about VideoView, please visit here. VideoView is a custom View. Since Jetpack Compose does not natively render traditional View objects, we integrate the VideoView into the Compose layout using the AndroidView wrapper. AndroidView() is a composable that can be used to add Android views inside a @Composable function.

@Composable

fun ParticipantVideoView(

participant: Participant

) {

var isVideoEnabled by remember { mutableStateOf(false) }

// Remember the event listener to prevent recreation on each recomposition

val eventListener = remember(participant) {

object : ParticipantEventListener() {

override fun onStreamEnabled(stream: Stream) {

if (stream.kind.equals("video", ignoreCase = true)) {

// The track itself is attached in the AndroidView block below; only the flag changes here

isVideoEnabled = true

}

}

override fun onStreamDisabled(stream: Stream) {

if (stream.kind.equals("video", ignoreCase = true)) {

isVideoEnabled = false

}

}

}

}

// Add and remove the event listener using side effects

DisposableEffect(participant, eventListener) {

participant.addEventListener(eventListener)

onDispose { participant.removeEventListener(eventListener) }

}

// Initial video state check

LaunchedEffect(participant) {

val hasVideoStream = participant.streams.any { (_, stream) ->

stream.kind.equals("video", ignoreCase = true) && stream.track != null

}

isVideoEnabled = hasVideoStream

}

Box(

modifier = Modifier.fillMaxWidth()

.height(200.dp)

.background(if (isVideoEnabled) Color.DarkGray else Color.Gray)

) {

AndroidView(

factory = { context ->

VideoView(context).apply {

for ((_, stream) in participant.streams) {

if (stream.kind.equals("video", ignoreCase = true)) {

val videoTrack = stream.track as VideoTrack

addTrack(videoTrack)

isVideoEnabled = true

}

}

}

},

update = { videoView ->

// Handle video track updates only

// The event listener is now managed separately

for ((_, stream) in participant.streams) {

if (stream.kind.equals(

"video",

ignoreCase = true

) && stream.track != null

) {

val videoTrack = stream.track as VideoTrack

videoView.addTrack(videoTrack)

}

}

},

modifier = Modifier.fillMaxSize()

)

if (!isVideoEnabled) {

Box(

modifier = Modifier.fillMaxSize()

.background(Color.DarkGray),

contentAlignment = Alignment.Center

) {

Text(text = "Camera Off", color = Color.White)

}

}

Box(

modifier = Modifier.align(Alignment.BottomCenter)

.fillMaxWidth()

.background(Color(0x99000000))

.padding(4.dp)

) {

Text(

text = participant.displayName,

color = Color.White,

modifier = Modifier.align(Alignment.Center)

)

}

}

}

@Composable

fun ParticipantsGrid(

participants: List<Participant>,

modifier: Modifier = Modifier

) {

LazyVerticalGrid(columns = GridCells.Fixed(2),

verticalArrangement = Arrangement.spacedBy(16.dp),

horizontalArrangement = Arrangement.spacedBy(16.dp),

modifier = modifier.fillMaxWidth()

.padding(8.dp)

) {

items(participants.size) { index ->

ParticipantVideoView(participant = participants[index])

}

}

}

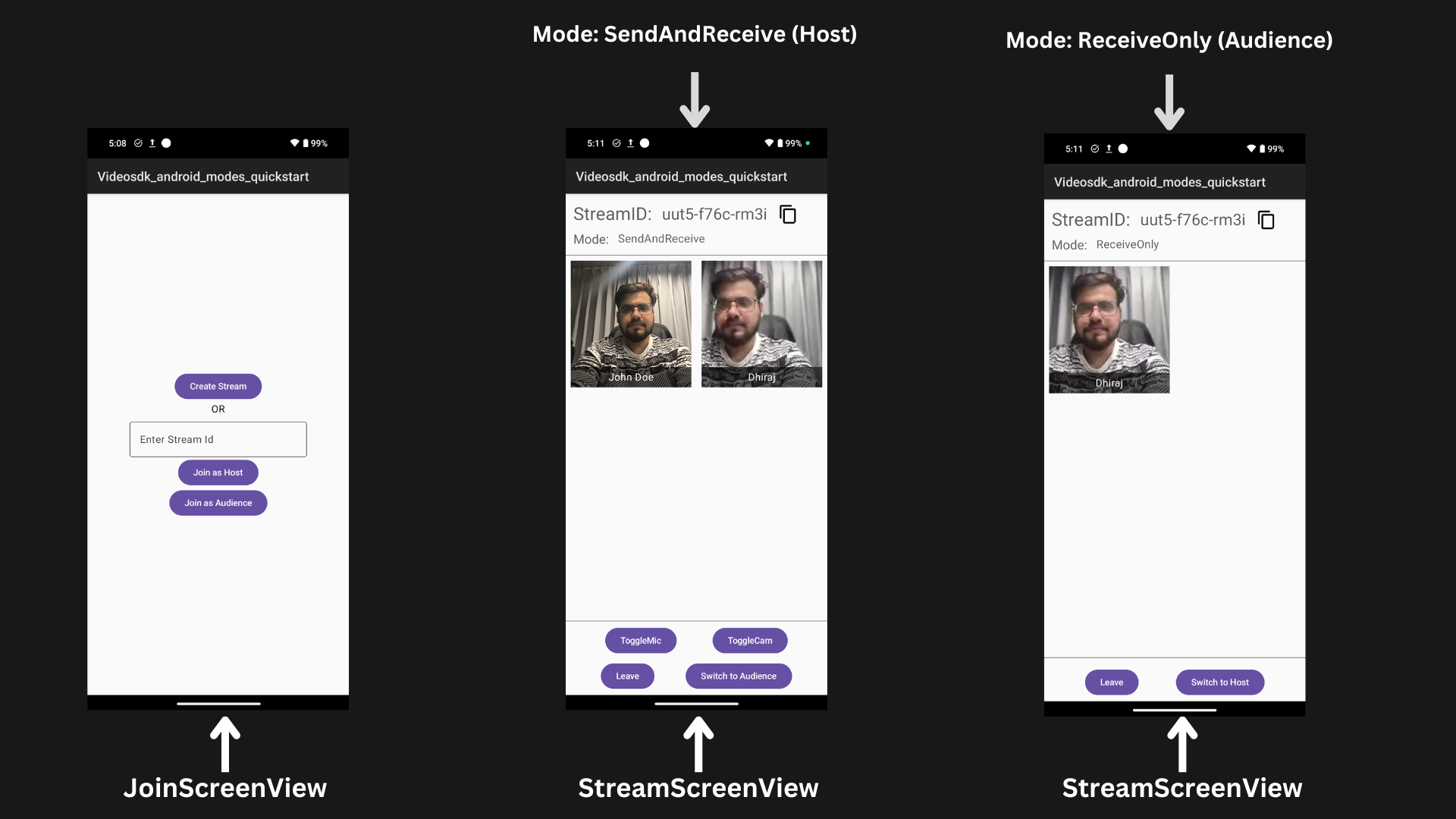

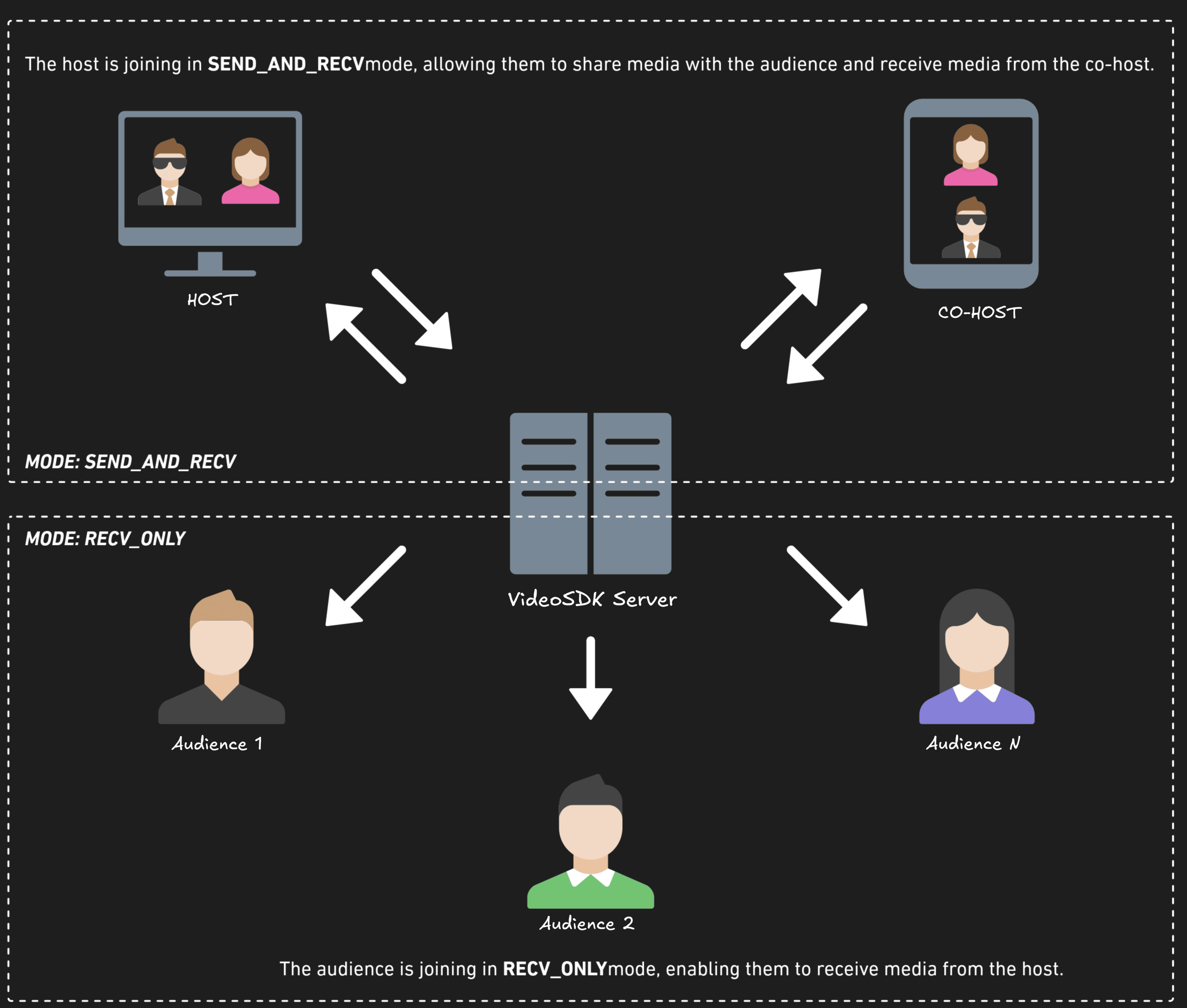

Before proceeding, let's understand the two modes of a Live Stream:

- SEND_AND_RECV:

  - Designed primarily for the Host or Co-host.

  - Allows sending and receiving media.

  - Hosts can broadcast their audio/video and interact directly with the audience.

- RECV_ONLY:

  - Tailored for the Audience.

  - Enables receiving media shared by the Host.

  - Audience members can view and listen but cannot share their own media.

enum class StreamingMode {

SendAndReceive,

ReceiveOnly

}
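The enum above uses Kotlin-style names, while the SDK calls later in this guide take the mode as a string. A small sketch of how the two could be bridged follows. Both `toSdkMode` and `parseStreamingMode` are hypothetical helpers introduced here for illustration, and the enum is repeated only so the snippet stands alone.

```kotlin
enum class StreamingMode { SendAndReceive, ReceiveOnly }

// Hypothetical extension: the SDK mode string each enum value stands for.
fun StreamingMode.toSdkMode(): String = when (this) {
    StreamingMode.SendAndReceive -> "SEND_AND_RECV"
    StreamingMode.ReceiveOnly -> "RECV_ONLY"
}

// Hypothetical parser: falls back to SendAndReceive for null or unknown names
// instead of throwing, which StreamingMode.valueOf() would do.
fun parseStreamingMode(name: String?): StreamingMode =
    StreamingMode.values().firstOrNull { it.name == name } ?: StreamingMode.SendAndReceive
```

A tolerant parser like this may be preferable when the mode arrives as a navigation argument, because a malformed route then degrades to the default mode rather than crashing.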

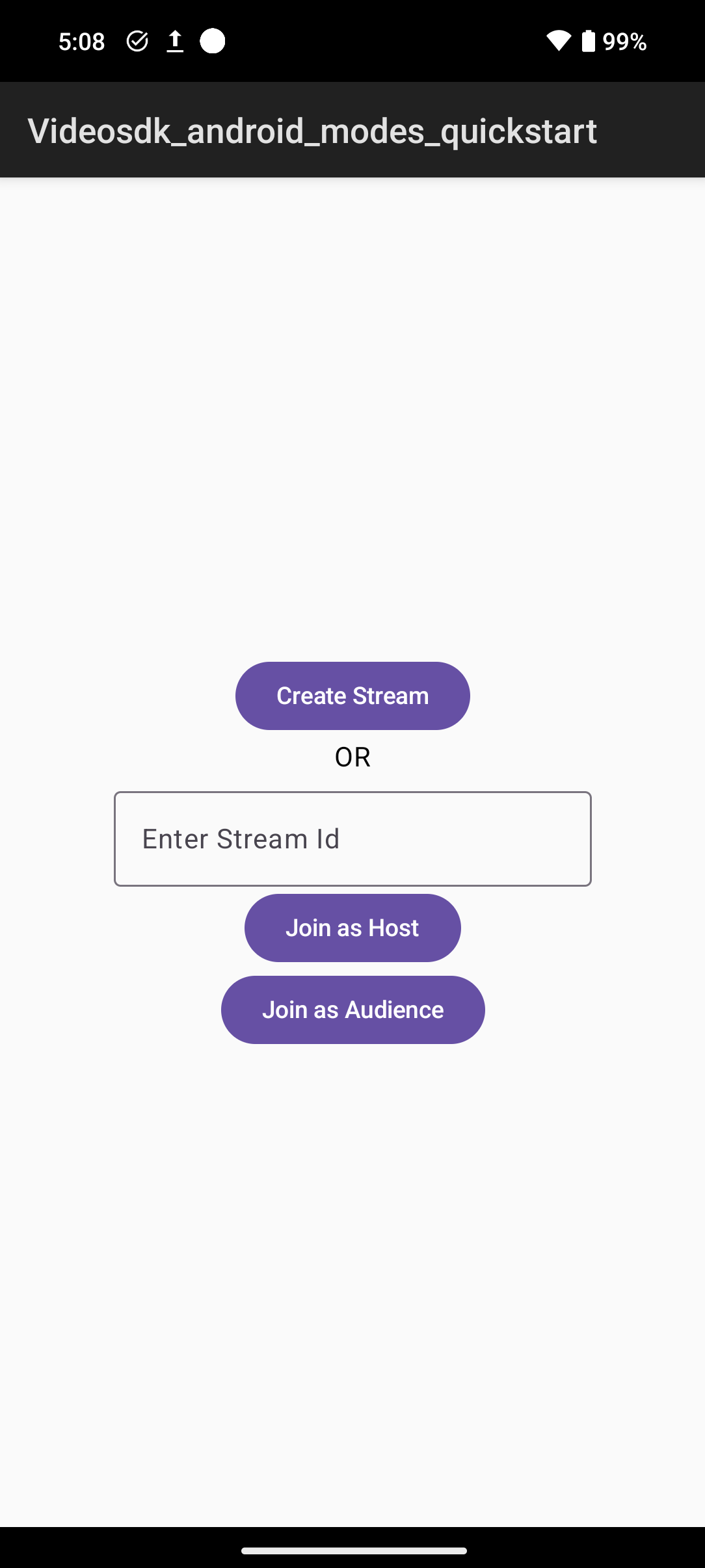

Step 4: Creating Joining Screen

The Join screen lets the user either create a new Live Stream or join an existing one as a host or as an audience member.

We will create a simple UI for the Join screen that includes three action buttons for joining or creating a Live Stream:

- Create Stream: Lets the user initiate a new Live Stream in SEND_AND_RECV mode, granting full host controls.

- Join as Host: Allows the user to join an existing Live Stream using the provided stream ID in SEND_AND_RECV mode, enabling full host privileges.

- Join as Audience: Enables the user to join an existing Live Stream using the provided stream ID in RECV_ONLY mode, allowing view-only access.

@Composable

fun JoinScreen(

navController: NavController, context: Context

) {

val app = context.applicationContext as MainApplication

val token = app.sampleToken

Box(

modifier = Modifier.fillMaxSize()

.padding(8.dp),

contentAlignment = Alignment.Center

) {

Column(

modifier = Modifier.padding(4.dp),

horizontalAlignment = Alignment.CenterHorizontally,

verticalArrangement = Arrangement.SpaceEvenly

) {

var input by rememberSaveable { mutableStateOf("") }

CreateStreamBtn(navController, token)

Text(text = "OR")

InputMeetingId(input) { updateInput -> input = updateInput }

JoinHostBtn(navController, input)

JoinAudienceBtn(navController, input)

}

}

}

@Composable

fun JoinHostBtn(navController: NavController, streamId: String) {

MyAppButton({

if (streamId.isNotEmpty()) {

navController.navigate("stream_screen?streamId=$streamId&mode=${StreamingMode.SendAndReceive.name}")

}

}, "Join as Host")

}

@Composable

fun JoinAudienceBtn(navController: NavController, streamId: String) {

MyAppButton({

if (streamId.isNotEmpty()) {

navController.navigate("stream_screen?streamId=$streamId&mode=${StreamingMode.ReceiveOnly.name}")

}

}, "Join as Audience")

}

@Composable

fun CreateStreamBtn(navController: NavController, token: String) {

MyAppButton({

NetworkManager.createStreamId(token) { streamId ->

navController.navigate("stream_screen?streamId=$streamId&mode=${StreamingMode.SendAndReceive.name}")

}

}, "Create Stream")

}

@Composable

fun InputMeetingId(input: String, onInputChange: (String) -> Unit) {

OutlinedTextField(value = input,

onValueChange = onInputChange,

label = { Text(text = "Enter Stream Id") })

}
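The join buttons above only check that the typed ID is not empty, so an ID pasted with surrounding whitespace would be sent as-is. One way to tidy this up is sketched below. Both functions are hypothetical helpers, not part of the quickstart or the SDK; the route format is copied from the navigate() calls above.

```kotlin
// Hypothetical helper: returns the trimmed ID, or null when the field is
// empty or contains only whitespace.
fun normalizeStreamId(input: String): String? =
    input.trim().takeIf { it.isNotEmpty() }

// Builds the same route string the join buttons pass to navController.navigate().
fun streamRoute(streamId: String, modeName: String): String =
    "stream_screen?streamId=$streamId&mode=$modeName"
```

A button handler could then call `normalizeStreamId(input)?.let { navController.navigate(streamRoute(it, mode.name)) }`, which keeps the route format in a single place.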


Step 5: Creating StreamId

The NetworkManager singleton handles stream creation by making a POST request to the VideoSDK API:

- Stream Creation: Sends a request to the API with an authorization token to create a stream.

- Response Handling: Extracts the roomId from the response and triggers the onStreamIdCreated callback.

object NetworkManager {

fun createStreamId(token: String, onStreamIdCreated: (String) -> Unit) {

AndroidNetworking.post("https://dev-api.videosdk.live/v2/rooms")

.addHeaders("Authorization", token)

.build()

.getAsJSONObject(object : JSONObjectRequestListener {

override fun onResponse(response: JSONObject) {

try {

val streamId = response.getString("roomId")

onStreamIdCreated(streamId)

} catch (e: JSONException) {

e.printStackTrace()

}

}

override fun onError(anError: ANError) {

anError.printStackTrace()

Log.d("TAG", "onError: $anError")

}

})

}

}
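In the version above, a failed request or a response without roomId is only logged, so the caller never learns that stream creation failed. The sketch below shows one possible alternative shape in which the callback receives a Result. It is deliberately detached from the networking library: `RoomApi` is a hypothetical stand-in for the HTTP call, and this overload of `createStreamId` is an illustration, not the quickstart's implementation.

```kotlin
// Hypothetical abstraction over the HTTP call; returns the parsed response body.
fun interface RoomApi {
    fun createRoom(token: String): Map<String, Any?>
}

// Reports success (the roomId) or failure (any exception, including a
// missing roomId) through a single callback.
fun createStreamId(api: RoomApi, token: String, onResult: (Result<String>) -> Unit) {
    val result = runCatching {
        val body = api.createRoom(token)
        body["roomId"] as? String ?: error("Response has no roomId")
    }
    onResult(result)
}
```

With this shape, the UI could show an error message in the failure branch, for example via `result.onFailure { ... }`, instead of silently staying on the join screen.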

Don't be confused by the Room and Stream keywords; both refer to the same thing 😃

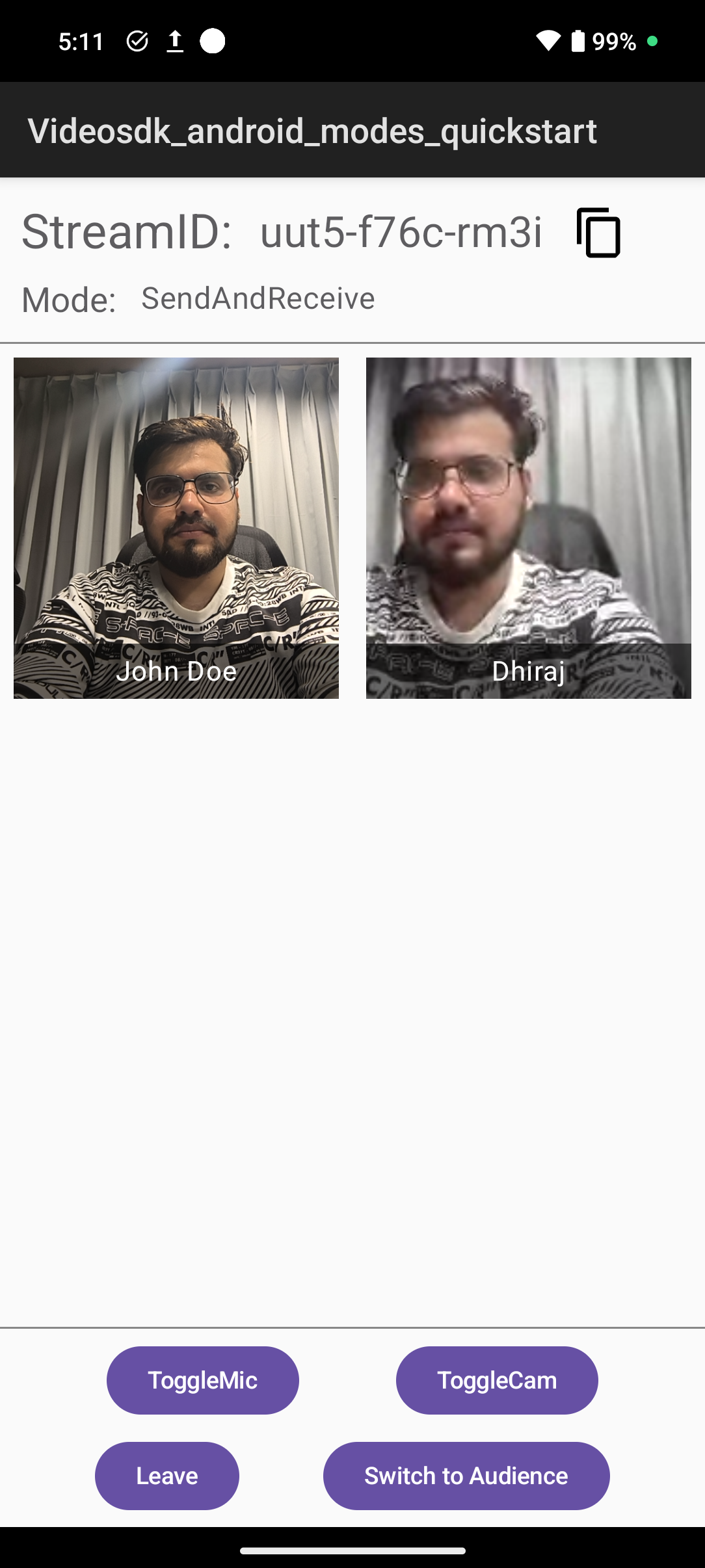

Step 6: Creating Streaming Screen

We will create a simple Streaming screen UI with two main sections:

- Header: Displays the stream ID and current streaming mode at the top of the screen for reference.

- ParticipantsGrid: Shows the list of participants in a grid layout, adjusting dynamically.

In Step 3, we created the ParticipantsGrid component.

@Composable

fun StreamingScreen(viewModel: StreamViewModel, navController: NavController,

streamId: String,

mode: StreamingMode,

context: Context

) {

val app = context.applicationContext as MainApplication

val isStreamLeft = viewModel.isStreamLeft

val currentMode = viewModel.currentMode

val isJoined = viewModel.isJoined

LaunchedEffect(isStreamLeft) {

if (isStreamLeft) {

navController.navigate("join_screen") }

}

Column(modifier = Modifier.fillMaxSize()) {

Header(streamId, currentMode)

MySpacer()

ParticipantsGrid(

participants = viewModel.currentParticipants,

modifier = Modifier.weight(1f)

)

MySpacer()

MediaControlButtons(

onJoinClick = { viewModel.initStream(context, app.sampleToken, streamId, mode)},

onMicClick = { viewModel.toggleMic() },

onCamClick = { viewModel.toggleWebcam()},

onModeToggleClick = { viewModel.toggleMode() },

showMediaControls = currentMode == StreamingMode.SendAndReceive,

currentMode = currentMode,

onLeaveClick = { viewModel.leaveStream() },

isJoined = isJoined )

}

}

@Composable

fun Header(streamId: String, mode: StreamingMode) {

val context = LocalContext.current

val clipboardManager = context.getSystemService(Context.CLIPBOARD_SERVICE) as ClipboardManager

Box(modifier = Modifier.fillMaxWidth()

.padding(8.dp),

contentAlignment = Alignment.TopStart

) {

Row( modifier = Modifier.fillMaxWidth(),

horizontalArrangement = Arrangement.SpaceBetween,

verticalAlignment = Alignment.CenterVertically

) {

Column {

Row(verticalAlignment = Alignment.CenterVertically) {

MyText("StreamID: ", 28.sp)

MyText(streamId, 25.sp)

IconButton( onClick = {

val clip = ClipData.newPlainText("Stream ID", streamId)

clipboardManager.setPrimaryClip(clip)

})

{

//get baseline_content_copy_24 icon from Vector Asset

Icon( painter = painterResource(R.drawable.baseline_content_copy_24),

contentDescription = "Copy Stream ID",

modifier = Modifier.size(40.dp).padding(start = 8.dp)

)

}

}

Row {

MyText("Mode: ", 20.sp)

MyText(mode.name, 18.sp)

}

}

}

}

}

//...

Step 7: Implementing Mode Switch and Media Toggles

Implement interactive controls to leave the stream, toggle microphone and webcam, and switch between Host (SEND_AND_RECV) and Audience (RECV_ONLY) modes for a seamless experience.

@Composable

fun MediaControlButtons(

onJoinClick: () -> Unit,

onMicClick: () -> Unit,

onCamClick: () -> Unit,

onModeToggleClick: () -> Unit,

onLeaveClick: () -> Unit,

showMediaControls: Boolean = true,

currentMode: StreamingMode,

isJoined: Boolean

) {

Column( modifier = Modifier.fillMaxWidth()

.padding(6.dp),

horizontalAlignment = Alignment.CenterHorizontally,

verticalArrangement = Arrangement.spacedBy(8.dp)

) {

Row( modifier = Modifier.fillMaxWidth(),

horizontalArrangement = Arrangement.SpaceEvenly,

verticalAlignment = Alignment.CenterVertically

) {

if (!isJoined) {

MyAppButton(onJoinClick, "Join")

}

if (showMediaControls && isJoined) { // Only show media controls if joined

MyAppButton(onMicClick, "ToggleMic")

MyAppButton(onCamClick, "ToggleCam")

}

}

Row( modifier = Modifier.fillMaxWidth(),

horizontalArrangement = Arrangement.SpaceEvenly,

verticalAlignment = Alignment.CenterVertically

) {

if (isJoined) {

MyAppButton(onLeaveClick, "Leave")

MyAppButton(

task = onModeToggleClick,

buttonName = if (currentMode == StreamingMode.SendAndReceive)

"Switch to Audience"

else

"Switch to Host"

)

}

}

}

}

Step 8: Setting Up StreamViewModel

The StreamViewModel manages the core functionality of Interactive Live Streaming (ILS), serving as the central state manager:

- Stream State Management

  - Handles stream initialization with the appropriate mode (SEND_AND_RECV for hosts, RECV_ONLY for the audience)

  - Tracks stream lifecycle (joined, left, current mode)

- Media Control Integration

  - Provides microphone toggle (mute/unmute)

  - Controls webcam state (enable/disable)

- Mode Switching

  - Enables seamless transitions between Host and Audience modes

class StreamViewModel : ViewModel() {

private var stream: Meeting? = null

private var micEnabled by mutableStateOf(true)

private var webcamEnabled by mutableStateOf(true)

var isConferenceMode by mutableStateOf(true)

val currentParticipants = mutableStateListOf<Participant>()

var localParticipantId by mutableStateOf("")

private set

var isStreamLeft by mutableStateOf(false)

private set

var currentMode by mutableStateOf(StreamingMode.SendAndReceive)

private set

var isJoined by mutableStateOf(false)

private set

fun initStream(context: Context, token: String, streamId: String, mode: StreamingMode) {

VideoSDK.config(token)

currentMode = mode

if (stream == null) {
    // Use the streamId passed in from the join screen rather than a hard-coded ID
    stream = if (mode == StreamingMode.SendAndReceive) {
        VideoSDK.initMeeting(
            context, streamId, "John Doe",
            micEnabled, webcamEnabled, null, null, true, null, null
        )
    } else {
        VideoSDK.initMeeting(
            context, streamId, "John Doe",
            micEnabled, webcamEnabled, null, "RECV_ONLY", true, null, null
        )
    }
}

stream!!.addEventListener(meetingEventListener)

stream!!.join()

isJoined = true

}

private val meetingEventListener: MeetingEventListener = object : MeetingEventListener() {

override fun onMeetingJoined() {

stream?.let {

if (it.localParticipant.mode != "RECV_ONLY") {

if (!currentParticipants.contains(it.localParticipant)) {

currentParticipants.add(it.localParticipant)

}

}

localParticipantId = it.localParticipant.id

}

}

override fun onMeetingLeft() {

currentParticipants.clear()

stream = null

isStreamLeft = true

}

override fun onParticipantJoined(participant: Participant) {

if (participant.mode != "RECV_ONLY") {

currentParticipants.add(participant)

}

}

override fun onParticipantLeft(participant: Participant) {

currentParticipants.remove(participant)

}

override fun onParticipantModeChanged(data: JSONObject?) {

try {

val participantId = data?.getString("peerId")

val participant = if (stream?.localParticipant?.id == participantId) {

stream?.localParticipant

} else {

stream?.participants?.get(participantId)

}

participant?.let {

when (it.mode) {

"RECV_ONLY" -> {

currentParticipants.remove(it)

}

"SEND_AND_RECV" -> {

if (!currentParticipants.contains(it)) {

currentParticipants.add(it)

} else { }

}

else -> {}

}

}

} catch (e: JSONException) {

e.printStackTrace()

}

}

}

fun toggleMic() {

if (micEnabled) stream?.muteMic() else stream?.unmuteMic()

micEnabled = !micEnabled

}

fun toggleWebcam() {

if (webcamEnabled) stream?.disableWebcam() else stream?.enableWebcam()

webcamEnabled = !webcamEnabled

}

fun leaveStream() {

currentParticipants.clear()

stream?.leave()

stream?.removeAllListeners()

isStreamLeft = true

isJoined = false

}

fun toggleMode() {

val newMode = if (currentMode == StreamingMode.SendAndReceive) {

stream?.disableWebcam()

stream?.changeMode("RECV_ONLY")

isConferenceMode = false

StreamingMode.ReceiveOnly

} else {

stream?.changeMode("SEND_AND_RECV")

isConferenceMode = true

StreamingMode.SendAndReceive

}

// Clear all participants first

currentParticipants.clear()

// Rebuild the list immediately; later changes arrive through onParticipantModeChanged

stream?.let { it ->

// Add back all non-viewer participants

it.participants.values.forEach { participant ->

if (participant.mode != "RECV_ONLY") {

currentParticipants.add(participant)

}

}

// Add local participant only if in SEND_AND_RECV mode

if (newMode == StreamingMode.SendAndReceive) {

it.localParticipant?.let { localParticipant ->

if (!currentParticipants.contains(localParticipant)) {

currentParticipants.add(localParticipant)

}

}

// Re-enable webcam if it was enabled before

if (webcamEnabled) {

it.enableWebcam() }

}

}

currentMode = newMode

}

}
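The listener callbacks and toggleMode() above all apply the same rule: a participant gets a tile in the grid only when it is not in RECV_ONLY mode. That rule can be isolated as a pure function, as sketched below. `ParticipantInfo` is a hypothetical stand-in for the SDK's Participant, reduced to the two fields the rule needs, so the snippet runs without the SDK.

```kotlin
// Stand-in for the SDK's Participant, reduced to the two fields the rule needs.
data class ParticipantInfo(val id: String, val mode: String)

// Only participants that can send media (any mode other than RECV_ONLY) get a tile.
fun visibleParticipants(all: List<ParticipantInfo>): List<ParticipantInfo> =
    all.filter { it.mode != "RECV_ONLY" }
```

Keeping the rule in one function makes it straightforward to unit test and avoids repeating the string comparison in each callback.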

Final Output

We are done with the implementation of a customised interactive live streaming app in Android using Video SDK. To explore more features, go through the Basic and Advanced features.

You can check out the complete quick start example here.

Got a question? Ask us on Discord.