Rendering Host and Audience Views - Flutter

In a live stream setup, only hosts (participants in SEND_AND_RECV mode) can broadcast their audio and video. Audience members (in RECV_ONLY mode) are passive viewers who do not share their audio/video.

To ensure optimal performance and a clean user experience, your app should:

- Render audio and video elements only for hosts (i.e., participants in SEND_AND_RECV mode).
- Display the total audience count to give context on viewership without rendering individual audience tiles.
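The audience count needs no per-viewer widgets, only a tally of participants in RECV_ONLY mode. Below is a minimal pure-Dart sketch of that tally. ParticipantMode and ParticipantInfo are hypothetical stand-ins for the SDK's Mode and Participant types, reduced to id and mode so the logic can run without a live room. audienceCount is likewise an illustrative name, not an SDK API.

```dart
// Hypothetical stand-ins for the SDK's Mode and Participant types,
// reduced to the two fields this sketch needs.
enum ParticipantMode { sendAndRecv, recvOnly }

class ParticipantInfo {
  final String id;
  final ParticipantMode mode;
  const ParticipantInfo(this.id, this.mode);
}

/// Counts audience members (RECV_ONLY) across the local participant and
/// the remote participants map, without building any per-viewer widgets.
int audienceCount(
  ParticipantInfo local,
  Map<String, ParticipantInfo> remotes,
) {
  var count = local.mode == ParticipantMode.recvOnly ? 1 : 0;
  for (final p in remotes.values) {
    // Skip the local participant in case the map also contains it.
    if (p.id != local.id && p.mode == ParticipantMode.recvOnly) {
      count++;
    }
  }
  return count;
}
```

In an app, the result would feed a single widget, such as Text('$count watching'). You would recompute it whenever participants join or leave.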

Filtering and Rendering Hosts

The steps involved in rendering the video of hosts are as follows.

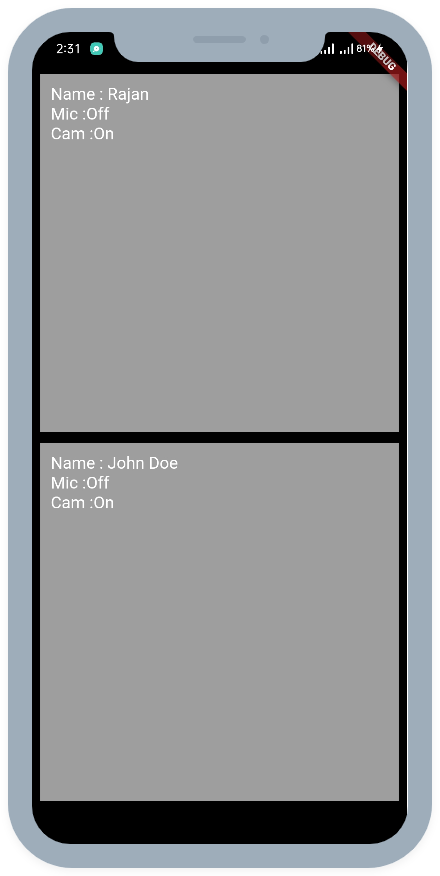

1. Filtering Hosts and Checking their Mic and Cam Status

In a live stream, only participants in SEND_AND_RECV mode (i.e., hosts) actively share their audio and video. To render their video streams, start by getting all participants from the Room class, then keep only those in SEND_AND_RECV mode. Because the local participant is passed separately from the participants map, check it as well.

For each host, use the Participant class, which provides real-time information such as displayName and the participant's current streams. Derive the microphone and camera status from those streams: an audio stream means the mic is on, and a video stream means the camera is on. Display these next to the participant's name. If the camera is off, show a simple placeholder with their name; if it's on, render their video feed. This way the audience sees only hosts, keeping the experience clean and intentional.

```dart
import 'package:flutter/material.dart';
import 'package:videosdk/videosdk.dart';

class LiveStreamGrid extends StatelessWidget {
  final Map<String, Participant> participants;
  final Participant localParticipant;

  const LiveStreamGrid(
      {Key? key, required this.participants, required this.localParticipant})
      : super(key: key);

  @override
  Widget build(BuildContext context) {
    // Filter only SEND_AND_RECV participants (hosts)
    final hostParticipants = [
      if (localParticipant.mode == Mode.SEND_AND_RECV) localParticipant,
      ...participants.values.where((participant) {
        return participant.mode == Mode.SEND_AND_RECV &&
            participant.id != localParticipant.id;
      }),
    ];

    return GridView.builder(
        padding: const EdgeInsets.all(8),
        gridDelegate: const SliverGridDelegateWithFixedCrossAxisCount(
          crossAxisCount: 3,
          crossAxisSpacing: 12,
          mainAxisSpacing: 12,
          childAspectRatio: 0.75,
        ),
        itemCount: hostParticipants.length,
        itemBuilder: (context, index) {
          final participant = hostParticipants[index];
          final isLocal = participant.id == localParticipant.id;
          return ParticipantViewTile(
            participant: participant,
            isLocal: isLocal,
          );
        });
  }
}

class ParticipantViewTile extends StatelessWidget {
  final Participant participant;
  final bool isLocal;

  const ParticipantViewTile({
    Key? key,
    required this.participant,
    this.isLocal = false,
  }) : super(key: key);

  @override
  Widget build(BuildContext context) {
    final micOn =
        participant.streams.values.any((stream) => stream.kind == 'audio');
    final camOn =
        participant.streams.values.any((stream) => stream.kind == 'video');

    return Container(
      padding: const EdgeInsets.all(12),
      decoration: BoxDecoration(
        color: Colors.grey[400],
        borderRadius: BorderRadius.circular(10),
      ),
      child: Column(
        crossAxisAlignment: CrossAxisAlignment.start,
        children: [
          Text(
            isLocal ? "You" : participant.displayName,
            style: const TextStyle(fontSize: 16, fontWeight: FontWeight.bold),
          ),
          const SizedBox(height: 10),
          Text("Cam: ${camOn ? 'On' : 'Off'}"),
          Text("Mic: ${micOn ? 'On' : 'Off'}"),
        ],
      ),
    );
  }
}
```
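The host filter is plain Dart, so it can be pulled out of the widget and unit-tested without joining a room. Below is a minimal sketch built on hypothetical stand-in types:

- ParticipantMode and ParticipantInfo replace the SDK's Mode and Participant.
- streamKinds stands in for the kind values found in participant.streams.
- hostsInDisplayOrder and statusLabel are illustrative helpers, not SDK APIs.

```dart
// Hypothetical stand-ins for the SDK's Mode and Participant types.
enum ParticipantMode { sendAndRecv, recvOnly }

class ParticipantInfo {
  final String id;
  final ParticipantMode mode;
  final Set<String> streamKinds; // e.g. {'audio', 'video'}
  const ParticipantInfo(this.id, this.mode,
      [this.streamKinds = const <String>{}]);
}

/// Hosts in display order: the local participant first (if it is a host),
/// then every remote host, skipping any duplicate of the local entry.
List<ParticipantInfo> hostsInDisplayOrder(
  ParticipantInfo local,
  Map<String, ParticipantInfo> remotes,
) {
  bool isHost(ParticipantInfo p) => p.mode == ParticipantMode.sendAndRecv;
  return [
    if (isHost(local)) local,
    ...remotes.values.where((p) => isHost(p) && p.id != local.id),
  ];
}

/// Cam/Mic status text derived from which stream kinds are present.
String statusLabel(ParticipantInfo p) {
  final cam = p.streamKinds.contains('video') ? 'On' : 'Off';
  final mic = p.streamKinds.contains('audio') ? 'On' : 'Off';
  return 'Cam: $cam | Mic: $mic';
}
```

Keeping the local participant first mirrors the grid above. The id check means a participants map that happens to include the local participant does not produce a duplicate tile.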

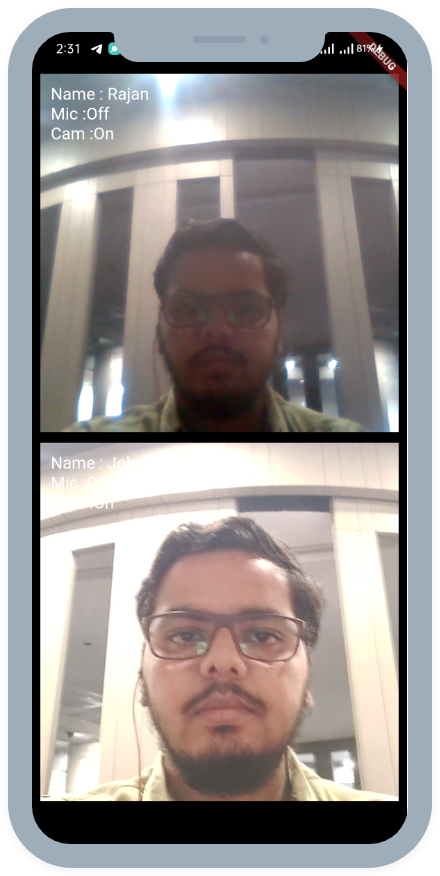

2. Rendering Video Streams of Hosts

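Once you've filtered for participants in SEND_AND_RECV mode (i.e., hosts), make the tile stateful so it can follow each host's streams in real time. Listen to the participant's streamEnabled, streamDisabled, streamPaused, and streamResumed events to keep the current video and audio streams up to date. Render the video stream with RTCVideoView whenever one is available.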

```dart
import 'package:flutter/material.dart';
import 'package:videosdk/videosdk.dart';

class ParticipantViewTile extends StatefulWidget {
  final Participant participant;
  final bool isLocal;

  const ParticipantViewTile({
    Key? key,
    required this.participant,
    this.isLocal = false,
  }) : super(key: key);

  @override
  State<ParticipantViewTile> createState() => _ParticipantViewTileState();
}

class _ParticipantViewTileState extends State<ParticipantViewTile> {
  Stream? videoStream;
  Stream? audioStream;

  @override
  void initState() {
    super.initState();
    _initStreamListeners();

    // Initial stream assignment. No setState() is needed here because
    // build() has not run yet.
    widget.participant.streams.forEach((key, stream) {
      if (stream.kind == 'video') {
        videoStream = stream;
      } else if (stream.kind == 'audio') {
        audioStream = stream;
      }
    });
  }

  void _initStreamListeners() {
    widget.participant.on(Events.streamEnabled, (Stream stream) {
      // Events can arrive after the tile has left the widget tree.
      if (!mounted) return;
      setState(() {
        if (stream.kind == 'video') {
          videoStream = stream;
        } else if (stream.kind == 'audio') {
          audioStream = stream;
        }
      });
    });

    widget.participant.on(Events.streamDisabled, (Stream stream) {
      if (!mounted) return;
      setState(() {
        if (stream.kind == 'video' && videoStream?.id == stream.id) {
          videoStream = null;
        } else if (stream.kind == 'audio' && audioStream?.id == stream.id) {
          audioStream = null;
        }
      });
    });

    // A paused video stream is hidden until it resumes.
    widget.participant.on(Events.streamPaused, (Stream stream) {
      if (!mounted) return;
      setState(() {
        if (stream.kind == 'video' && videoStream?.id == stream.id) {
          videoStream = null;
        }
      });
    });

    widget.participant.on(Events.streamResumed, (Stream stream) {
      if (!mounted) return;
      setState(() {
        if (stream.kind == 'video') {
          videoStream = stream;
        }
      });
    });
  }

  @override
  Widget build(BuildContext context) {
    final name = widget.participant.displayName;
    // Guard against an empty display name before taking its first character.
    final initial = name.isNotEmpty ? name.characters.first.toUpperCase() : '?';

    return Container(
      decoration: BoxDecoration(
        color: Colors.black87,
        borderRadius: BorderRadius.circular(10),
      ),
      child: Stack(
        children: [
          if (videoStream != null)
            ClipRRect(
              borderRadius: BorderRadius.circular(10),
              child: RTCVideoView(
                videoStream?.renderer as RTCVideoRenderer,
                objectFit: RTCVideoViewObjectFit.RTCVideoViewObjectFitCover,
              ),
            )
          else
            // Camera off or paused: show a placeholder with the host's initial.
            Center(
              child: Container(
                width: 60,
                height: 60,
                decoration: const BoxDecoration(
                  color: Colors.grey,
                  shape: BoxShape.circle,
                ),
                alignment: Alignment.center,
                child: Text(
                  initial,
                  style: const TextStyle(
                    color: Colors.white,
                    fontSize: 24,
                  ),
                ),
              ),
            ),
          Positioned(
            bottom: 8,
            left: 8,
            child: Container(
              padding: const EdgeInsets.all(6),
              decoration: BoxDecoration(
                color: Colors.black.withOpacity(0.6),
                borderRadius: BorderRadius.circular(6),
              ),
              child: Column(
                crossAxisAlignment: CrossAxisAlignment.start,
                children: [
                  Text(
                    widget.isLocal ? "You" : widget.participant.displayName,
                    style: const TextStyle(color: Colors.white, fontSize: 14),
                  ),
                  Text(
                    "Cam: ${videoStream != null ? 'On' : 'Off'} | Mic: ${audioStream != null ? 'On' : 'Off'}",
                    style: const TextStyle(color: Colors.white, fontSize: 12),
                  ),
                ],
              ),
            ),
          ),
        ],
      ),
    );
  }
}
```
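The listener rules in _ParticipantViewTileState amount to a small state update. Expressing them as a pure function makes them testable without a live stream. This is a minimal sketch that uses string ids as stand-ins for the SDK's Stream objects. TileStreams, StreamEventType, and applyEvent are hypothetical names, not SDK APIs.

```dart
// Hypothetical event names mirroring the four SDK events the tile listens to.
enum StreamEventType { enabled, disabled, paused, resumed }

/// What a tile should render: the current stream ids (null means "none").
/// Ids stand in for the SDK's Stream objects.
class TileStreams {
  final String? videoId;
  final String? audioId;
  const TileStreams({this.videoId, this.audioId});
}

/// Applies one stream event using the same rules as the tile's listeners.
TileStreams applyEvent(
  TileStreams s,
  StreamEventType type,
  String kind,
  String streamId,
) {
  switch (type) {
    case StreamEventType.enabled:
      if (kind == 'video') {
        return TileStreams(videoId: streamId, audioId: s.audioId);
      }
      if (kind == 'audio') {
        return TileStreams(videoId: s.videoId, audioId: streamId);
      }
      return s;
    case StreamEventType.disabled:
      // Only clear a stream if the event refers to the one being shown.
      if (kind == 'video' && s.videoId == streamId) {
        return TileStreams(audioId: s.audioId);
      }
      if (kind == 'audio' && s.audioId == streamId) {
        return TileStreams(videoId: s.videoId);
      }
      return s;
    case StreamEventType.paused:
      // Only a paused video changes the tile: it falls back to the placeholder.
      if (kind == 'video' && s.videoId == streamId) {
        return TileStreams(audioId: s.audioId);
      }
      return s;
    case StreamEventType.resumed:
      if (kind == 'video') {
        return TileStreams(videoId: streamId, audioId: s.audioId);
      }
      return s;
  }
}
```

The id comparison in the disabled and paused cases matters. It ensures an event for an older stream cannot clear the stream that is currently displayed.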

You don't need to render audio separately. RTCVideoView renders only the host's video, while their audio plays automatically. The tile tracks audioStream only to show the mic status.

API Reference

The API references for all the methods and events utilized in this guide are provided below.
