Quick Start for Interactive Live Streaming in iOS

VideoSDK lets you integrate interactive live streaming into your iOS application in minutes. Although the SDK was built for meetings, it adapts easily to live streaming, with support for up to 100 hosts/co-hosts and 2,000 viewers in real time, which makes it a good fit for social use cases. This guide walks you through integrating live streaming into your app.

Prerequisites

- iOS 13.0+

- Xcode 15.0+

- Swift 5.0+

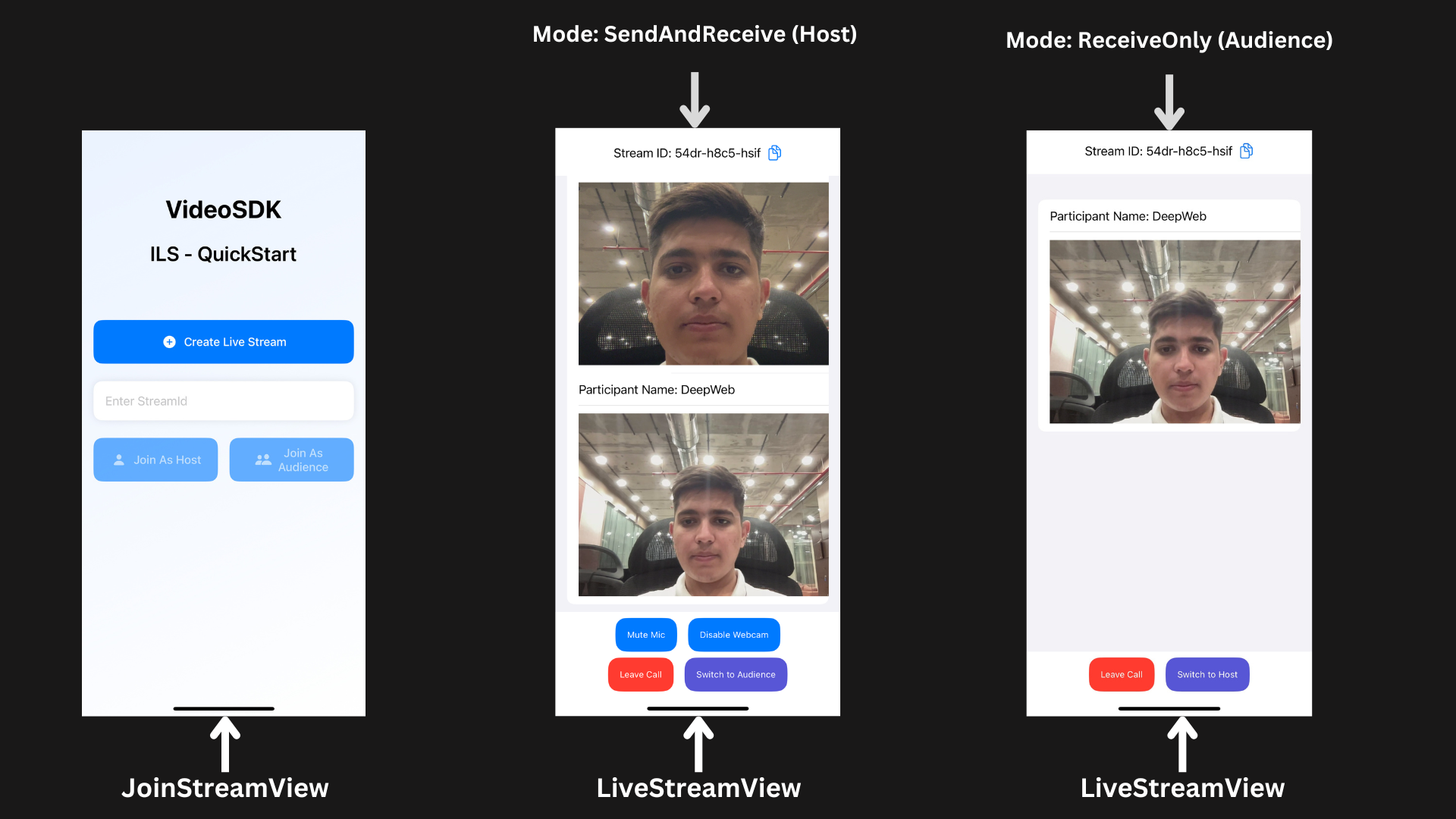

App Architecture

The application consists of two primary interfaces:

Join Stream View

- Allows users to create a new Live Stream, or join an existing one with a stream ID, and select their mode (Host or Audience).

Live Stream View

- Provides Live Stream controls and adapts the UI dynamically based on the user's mode (Host or Audience).

Getting Started With the Code!

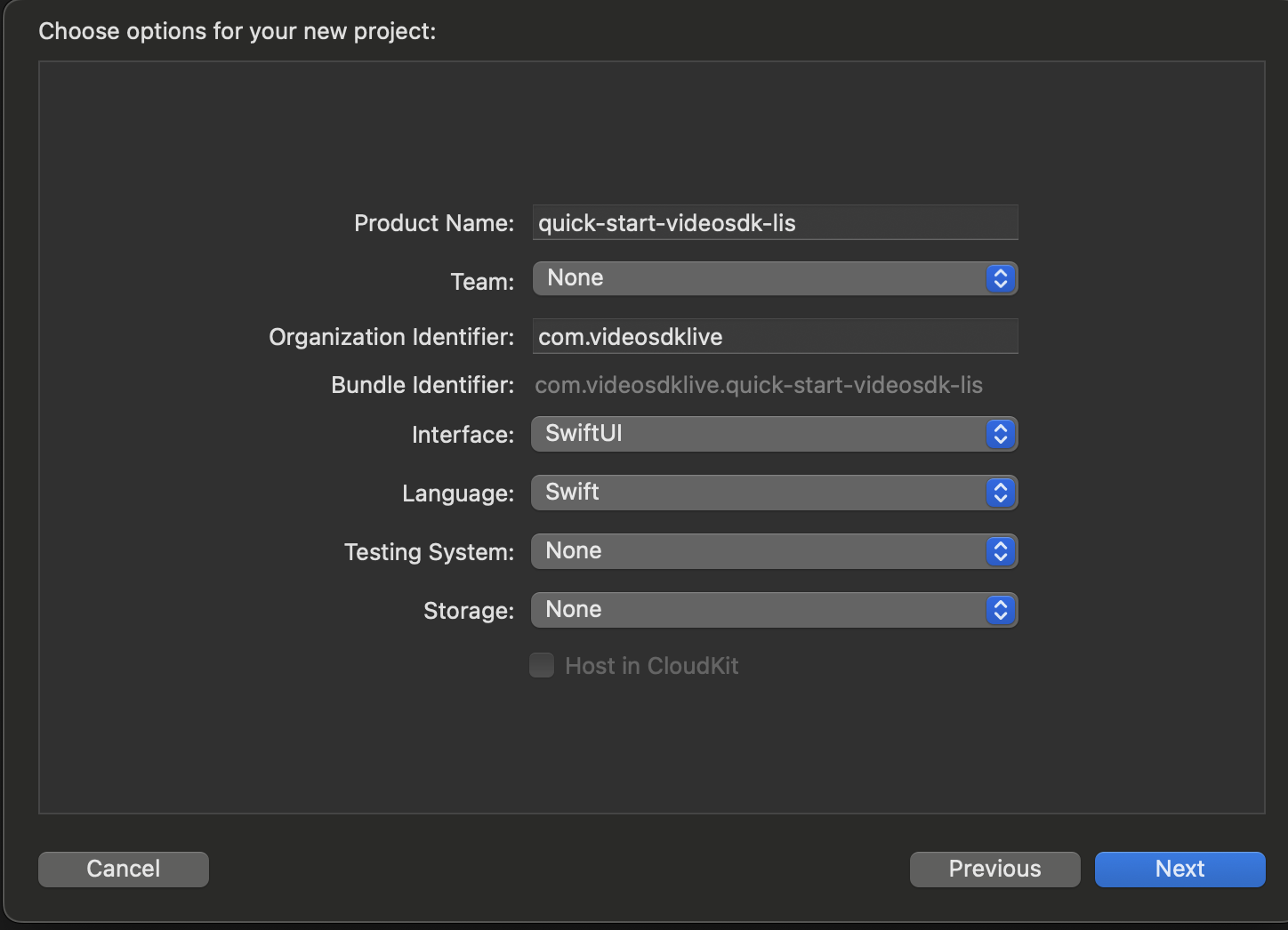

Step 1: Create New iOS Application

- Create a new application by selecting Create a new Xcode project.

- Add a Product Name and save the project.

Step 2: VideoSDK Installation

There are two ways to install VideoSDK: Using Swift Package Manager (SPM) or Using CocoaPods.

1. Install Using Swift Package Manager (SPM)

To install VideoSDK via Swift Package Manager, follow these steps:

- Open your Xcode project and go to File > Add Packages.

- Enter the repository URL:

https://github.com/videosdk-live/videosdk-rtc-ios-spm

- Choose the version rule (e.g., "Up to Next Major") and add the package to your target.

- Import the library in Swift files:

import VideoSDKRTC

For more details, refer to the official guide on SPM installation.

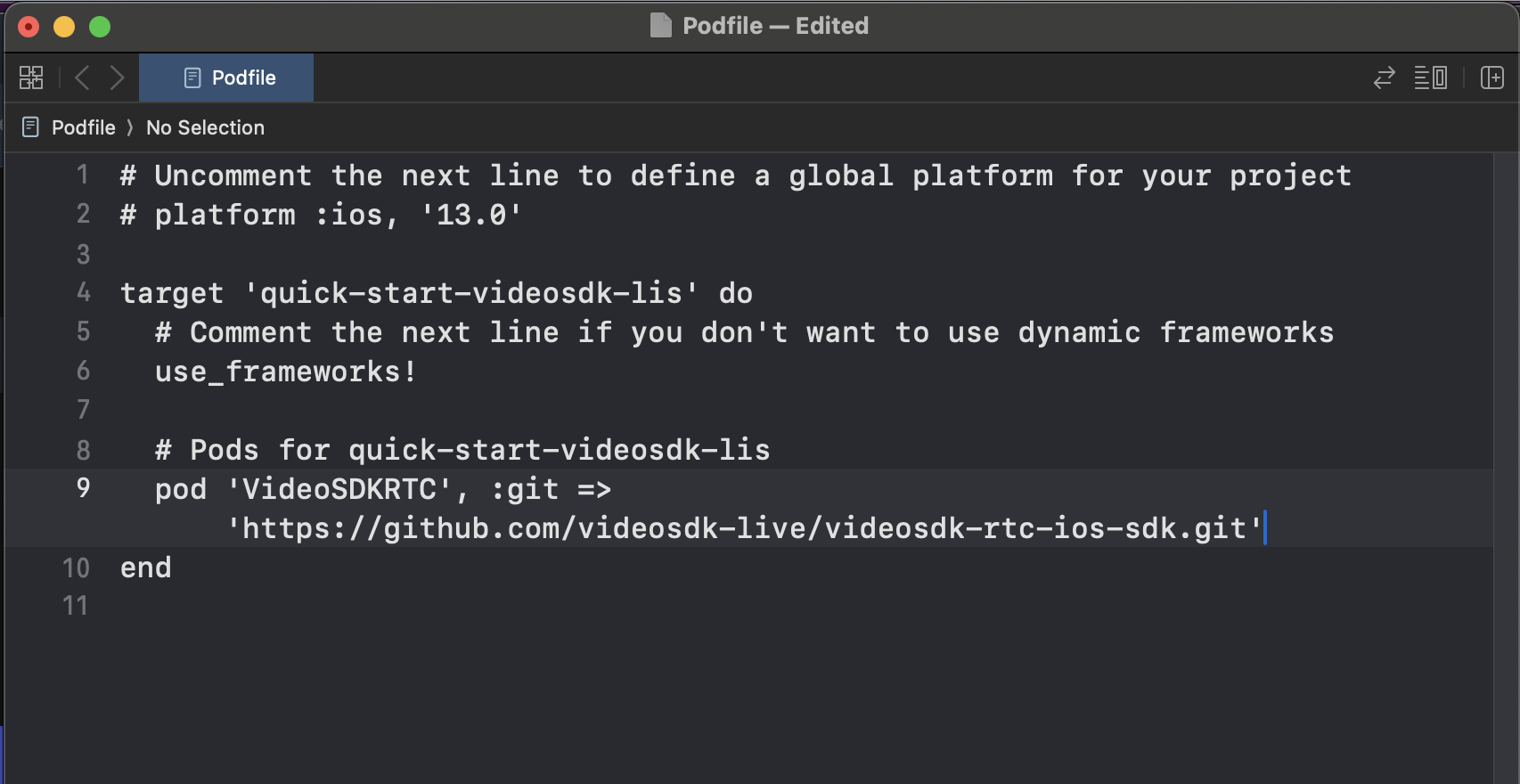

2. Install Using CocoaPods

To install VideoSDK using CocoaPods, follow these steps:

- Initialize CocoaPods: Run the following command in your project directory:

pod init

- Update the Podfile: Open the Podfile and add the VideoSDK dependency:

pod 'VideoSDKRTC', :git => 'https://github.com/videosdk-live/videosdk-rtc-ios-sdk.git'

- Install the Pod: Run the following command to install the pod:

pod install

For more details, refer to the official guide on CocoaPods installation.

Whichever installation method you use, then declare the camera and microphone permissions in Info.plist:

<key>NSCameraUsageDescription</key>

<string>Camera permission description</string>

<key>NSMicrophoneUsageDescription</key>

<string>Microphone permission description</string>

Step 3: Project Structure

quick-start-videosdk-lis
├── quick-start-videosdk-lis.swift // Default
├── Screens
│   ├── JoinStreamView
│   │   └── JoinStreamView.swift
│   └── LiveStreamView
│       ├── LiveStreamView.swift
│       └── LiveStreamViewController.swift
└── Info.plist // Default
Pods
└── Podfile

Step 4: Create JoinStream View

The JoinStreamView acts as the entry point for users to initiate or participate in Live Streams with the following functionalities:

- Create Live Stream as Host: Start a new Live Stream in SEND_AND_RECV mode, providing full host privileges.

- Join as Host: Enter an existing Live Stream using a stream ID with SEND_AND_RECV mode, granting full host controls.

- Join as Audience: Join an existing Live Stream using a stream ID with RECV_ONLY mode, offering view-only access.
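The three entry points above can be summarized as a small lookup from action to mode. The following is a minimal sketch in plain Swift so that it runs without the SDK: `SketchMode` and `JoinAction` are hypothetical names introduced here for illustration, with `SketchMode` standing in for the SDK's mode values.

```swift
// Hypothetical stand-in for the SDK's mode values, so the sketch runs without VideoSDKRTC.
enum SketchMode: String {
    case sendAndRecv = "SEND_AND_RECV"
    case recvOnly = "RECV_ONLY"
}

// The three entry points offered by JoinStreamView (hypothetical type).
enum JoinAction {
    case createAsHost
    case joinAsHost
    case joinAsAudience

    // Mode the user enters the stream with.
    var mode: SketchMode {
        switch self {
        case .createAsHost, .joinAsHost: return .sendAndRecv
        case .joinAsAudience: return .recvOnly
        }
    }

    // Creating a stream needs no ID; joining an existing one does.
    var requiresStreamId: Bool {
        switch self {
        case .createAsHost: return false
        case .joinAsHost, .joinAsAudience: return true
        }
    }

    // Mirrors the idea of disabling the join buttons while the ID field is empty.
    func isEnabled(streamId: String) -> Bool {
        return !requiresStreamId || !streamId.isEmpty
    }
}

print(JoinAction.joinAsAudience.mode.rawValue)          // RECV_ONLY
print(JoinAction.joinAsHost.isEnabled(streamId: ""))    // false
```

Keeping this mapping in one place means each button only has to name its action instead of repeating the mode and the empty-ID check.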

- Swift

import SwiftUI

struct JoinStreamView: View {

@State var streamId: String

@State var name: String

var body: some View {

NavigationView {

ZStack {

// Background gradient

LinearGradient(colors: [.blue.opacity(0.1), .white],

startPoint: .topLeading,

endPoint: .bottomTrailing)

.ignoresSafeArea()

VStack(spacing: 24) {

Text("VideoSDK")

.font(.largeTitle)

.fontWeight(.bold)

.padding(.top,80)

Text("ILS - QuickStart")

.font(.title)

.fontWeight(.semibold)

.padding(.bottom,50)

// Create Room Button

HStack {

NavigationLink(

destination: LiveStreamView(userName: name.isEmpty ? "Guest" : name, mode: .SEND_AND_RECV)

.navigationBarBackButtonHidden(true)

) {

ActionButton(title: "Create Live Stream", icon: "plus.circle.fill")

}

.padding(.horizontal)

}

// Stream ID input

HStack {

TextField("Enter StreamId", text: $streamId)

.textFieldStyle(.plain)

.autocorrectionDisabled()

if !streamId.isEmpty {

Button(action: { streamId = "" }) {

Image(systemName: "xmark.circle.fill")

.foregroundColor(.gray)

}

}

}

.padding()

.background(

RoundedRectangle(cornerRadius: 12)

.fill(.white)

.shadow(color: .black.opacity(0.1), radius: 5)

)

.padding(.horizontal)

// Buttons Stack

VStack(spacing: 16) {

HStack(spacing: 16) {

NavigationLink(destination: {

if !streamId.isEmpty {

LiveStreamView(streamId: streamId,

userName: name.isEmpty ? "Guest" : name,

mode: .SEND_AND_RECV)

.navigationBarBackButtonHidden(true)

}

}) {

ActionButton(title: "Join As Host", icon: "person.fill")

.opacity(streamId.isEmpty ? 0.6 : 1)

}

.disabled(streamId.isEmpty)

NavigationLink(

destination: LiveStreamView(

streamId: streamId,

userName: name.isEmpty ? "Guest" : name,

mode: .RECV_ONLY

)

.navigationBarBackButtonHidden(true)

) {

ActionButton(title: "Join As Audience", icon: "person.2.fill")

.opacity(streamId.isEmpty ? 0.6 : 1)

}

.disabled(streamId.isEmpty)

}

}

.padding(.horizontal)

Spacer()

}

}

}

}

}

struct ActionButton: View {

let title: String

let icon: String

var body: some View {

HStack {

Image(systemName: icon)

.frame(width: 24, height: 24)

Text(title)

.font(.system(size: 16))

.fontWeight(.medium)

.multilineTextAlignment(.center)

.lineLimit(2)

}

.frame(maxWidth: .infinity, minHeight: 60)

.padding(.horizontal)

.background(

RoundedRectangle(cornerRadius: 12)

.fill(Color.blue)

)

.foregroundColor(.white)

}

}

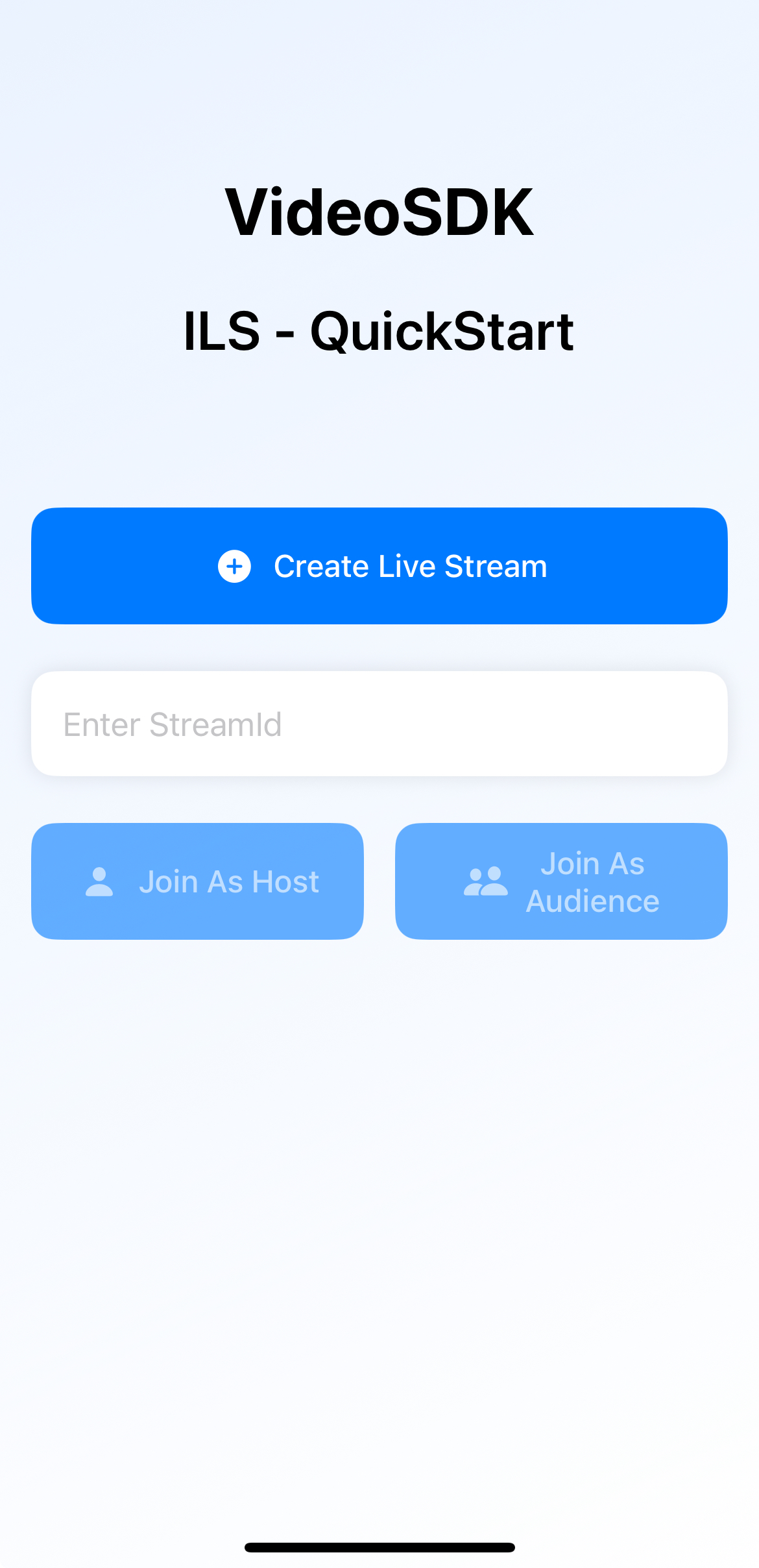

Output

Also set JoinStreamView as the root view of the main app, as shown below:

- Swift

import SwiftUI

@main

struct quick_start_videosdk_lis: App {

var body: some Scene {

WindowGroup {

JoinStreamView(streamId: "", name: "")

}

}

}

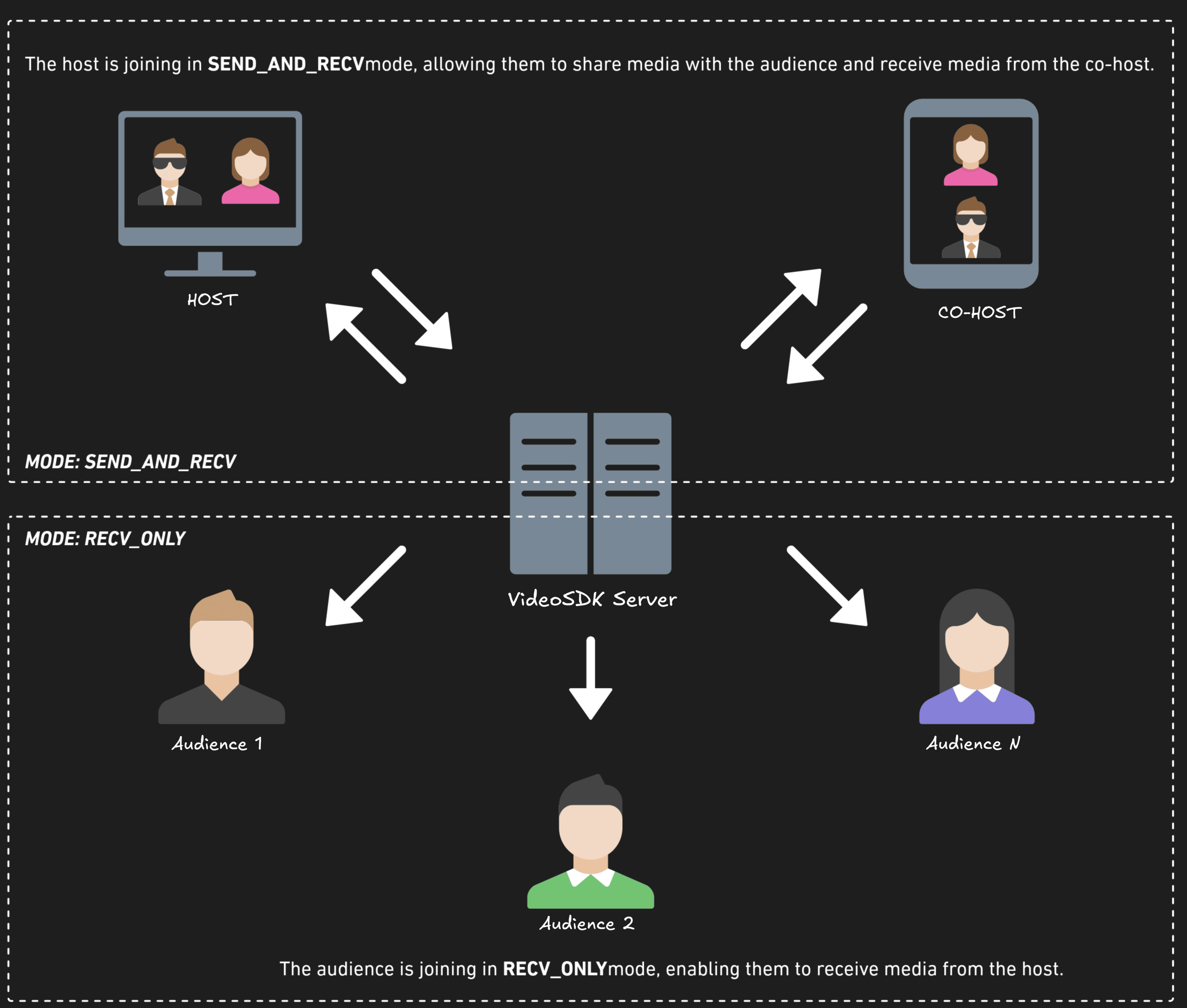

Before proceeding, let's understand the two modes of a Live Stream:

1. SEND_AND_RECV (For Host or Co-host):

- Designed primarily for the Host or Co-host.

- Allows sending and receiving media.

- Hosts can broadcast their audio/video and interact directly with the audience.

2. RECV_ONLY (For Audience):

- Tailored for the Audience.

- Enables receiving media shared by the Host.

- Audience members can view and listen but cannot share their own media.
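As a rough illustration of the two modes, the sketch below encodes what each one is allowed to do. `SketchMode` is a hypothetical stand-in for the SDK's mode type, and the property names are assumptions made for this example only.

```swift
// Hypothetical model of the two Live Stream modes; not the SDK's own type.
enum SketchMode {
    case sendAndRecv   // Host or Co-host
    case recvOnly      // Audience

    // Only hosts and co-hosts publish their own audio/video.
    var canSendMedia: Bool { self == .sendAndRecv }

    // Both modes receive media shared by hosts.
    var canReceiveMedia: Bool { true }

    // Human-readable role, as used in this guide.
    var role: String {
        switch self {
        case .sendAndRecv: return "Host or Co-host"
        case .recvOnly: return "Audience"
        }
    }
}

for mode in [SketchMode.sendAndRecv, SketchMode.recvOnly] {
    print("\(mode.role): sends media = \(mode.canSendMedia), receives media = \(mode.canReceiveMedia)")
}
```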

Step 5: Initialize and Join the Live Stream

In this step, we set up initializeStream and its related functions inside LiveStreamViewController. You will need an auth token, which you can generate either with videosdk-server-api-example or from the Video SDK Dashboard for developers.

LiveStreamViewController will implement various event listeners such as MeetingEventListener, ParticipantEventListener.

- Swift

import Foundation

import VideoSDKRTC

import WebRTC

struct RoomStruct: Codable {

let roomID: String?

enum CodingKeys: String, CodingKey {

case roomID = "roomId"

}

}

class LiveStreamViewController: ObservableObject {

var token = "YOUR_TOKEN"

var streamId: String = ""

var name: String = ""

@Published var meeting: Meeting? = nil

@Published var localParticipantView: VideoView? = nil

@Published var videoTrack: RTCVideoTrack?

@Published var participants: [Participant] = []

@Published var streamID: String = ""

func initializeStream(streamId: String, userName: String, mode: Mode) {

meeting = VideoSDK.initMeeting(

meetingId: streamId,

participantName: userName,

micEnabled: true,

webcamEnabled: true,

mode: mode // Pass the mode here

)

// Add event listeners before joining so no early event is missed

meeting?.addEventListener(self)

// Join the stream

meeting?.join()

}

}

extension LiveStreamViewController: MeetingEventListener {

func onMeetingJoined() {

guard let localParticipant = self.meeting?.localParticipant else { return }

// add to list

participants.append(localParticipant)

// add event listener

localParticipant.addEventListener(self)

}

func onMeetingLeft() {

meeting?.localParticipant.removeEventListener(self)

meeting?.removeEventListener(self)

}

}

extension LiveStreamViewController: ParticipantEventListener {

func onStreamEnabled(_ stream: MediaStream, forParticipant participant: Participant) {

if participant.isLocal {

if let track = stream.track as? RTCVideoTrack {

DispatchQueue.main.async {

self.videoTrack = track

}

}

} else {

if let track = stream.track as? RTCVideoTrack {

DispatchQueue.main.async {

self.videoTrack = track

}

}

}

}

func onStreamDisabled(_ stream: MediaStream, forParticipant participant: Participant) {

// Clear the published track only when a video stream stops, and always on the main thread

if stream.track is RTCVideoTrack {

DispatchQueue.main.async {

self.videoTrack = nil

}

}

}

}

extension LiveStreamViewController {

// create a new stream id

func joinRoom(userName: String, mode: Mode) {

let urlString = "https://api.videosdk.live/v2/rooms"

let session = URLSession.shared

let url = URL(string: urlString)!

var request = URLRequest(url: url)

request.httpMethod = "POST"

request.addValue(self.token, forHTTPHeaderField: "Authorization")

session.dataTask(with: request, completionHandler: { (data: Data?, response: URLResponse?, error: Error?) in

if let data = data, let utf8Text = String(data: data, encoding: .utf8)

{

print("UTF =>=>\(utf8Text)") // original server data as UTF8 string

do{

let dataArray = try JSONDecoder().decode(RoomStruct.self,from: data)

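DispatchQueue.main.async {

// Bail out instead of force-unwrapping when the response has no roomId

guard let roomID = dataArray.roomID else {

print("Room ID missing in response")

return

}

self.streamID = roomID

self.joinStream(streamId: roomID, userName: userName, mode: mode)

}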

print(dataArray)

} catch {

print(error)

}

}

}

).resume()

}

// initialise a stream with the given stream id (either new or existing)

func joinStream(streamId: String, userName: String, mode: Mode) {

if !token.isEmpty {

// use provided token for the stream

self.streamID = streamId

self.initializeStream(streamId: streamId, userName: userName, mode: mode)

}

else {

print("Auth token required")

}

}

}
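The response handling in joinRoom can be tried in isolation. This is a minimal sketch that assumes only what the code above reads, namely a JSON object with a `roomId` key. `RoomResponse`, `RoomParseError`, and `parseRoomId` are hypothetical names, and the sample payload is made up for illustration; a real response may carry more fields.

```swift
import Foundation

// Mirrors the tutorial's RoomStruct: only the "roomId" key is read.
struct RoomResponse: Decodable {
    let roomID: String?

    enum CodingKeys: String, CodingKey {
        case roomID = "roomId"
    }
}

// Hypothetical error type for this sketch.
enum RoomParseError: Error {
    case missingRoomId
}

// Decodes the body and returns the room ID, throwing instead of force-unwrapping.
func parseRoomId(from data: Data) throws -> String {
    let response = try JSONDecoder().decode(RoomResponse.self, from: data)
    guard let roomID = response.roomID, !roomID.isEmpty else {
        throw RoomParseError.missingRoomId
    }
    return roomID
}

// Made-up payload for illustration only.
let sampleBody = Data(#"{"roomId": "abcd-efgh-ijkl"}"#.utf8)

do {
    print(try parseRoomId(from: sampleBody))
} catch {
    print("Could not read room ID: \(error)")
}
```

Throwing on a missing ID lets the caller decide how to surface the failure, rather than crashing inside the network callback.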

Step 6: Create LiveStream View

The LiveStreamView file manages the live streaming interface and adapts to the user's role.

- Swift

import SwiftUI

import VideoSDKRTC

import WebRTC

struct LiveStreamView: View {

@Environment(\.presentationMode) var presentationMode

@ObservedObject var liveStreamViewController = LiveStreamViewController()

@State var streamId: String?

@State var userName: String?

@State var isUnMute: Bool = true

@State var camEnabled: Bool = true

@State private var currentMode: Mode

init(streamId: String? = nil, userName: String? = nil, mode: Mode) {

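// Seed the @State storage directly; plain assignment in init may not persist

self._streamId = State(initialValue: streamId)

self._userName = State(initialValue: userName)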

self._currentMode = State(initialValue: mode)

}

private var isAudienceMode: Bool {

// Derive audience mode from the current participant's mode

if let localParticipant = liveStreamViewController.participants.first(where: { $0.isLocal }) {

return localParticipant.mode == .RECV_ONLY

}

return currentMode == .RECV_ONLY

}

var body: some View {

VStack {

if liveStreamViewController.participants.isEmpty {

Text("Stream Initializing")

} else {

VStack {

// Stream ID Tile

HStack {

Text("Stream ID: \(liveStreamViewController.streamID)")

.padding(.vertical)

Button(action: {

UIPasteboard.general.string = liveStreamViewController.streamID

}) {

Image(systemName: "doc.on.doc")

.foregroundColor(.blue)

}

}

// Participant List

List {

ForEach(liveStreamViewController.participants.indices, id: \.self) { index in

let participant = liveStreamViewController.participants[index]

if participant.mode != .RECV_ONLY {

Text("Participant Name: \(participant.displayName)")

ZStack {

ParticipantView(track: participant.streams.first(where: { $1.kind == .state(value: .video) })?.value.track as? RTCVideoTrack)

.frame(height: 250)

if participant.streams.first(where: { $1.kind == .state(value: .video) }) == nil {

Color.white.opacity(1.0)

.frame(width: UIScreen.main.bounds.width, height: 250)

Text("No media")

}

}

}

}

}

}

}

}

.onAppear {

VideoSDK.config(token: liveStreamViewController.token)

if let streamId = streamId, !streamId.isEmpty {

liveStreamViewController.joinStream(streamId: streamId, userName: userName ?? "Guest", mode: currentMode)

} else {

liveStreamViewController.joinRoom(userName: userName ?? "Guest", mode: currentMode)

}

}

}

}

/// VideoView for participant's video

class VideoView: UIView {

var videoView: RTCMTLVideoView = {

let view = RTCMTLVideoView()

view.videoContentMode = .scaleAspectFill

view.backgroundColor = UIColor.black

view.clipsToBounds = true

view.frame = CGRect(x: 0, y: 0, width: UIScreen.main.bounds.width, height: 250)

return view

}()

init(track: RTCVideoTrack?) {

super.init(frame: CGRect(x: 0, y: 0, width: UIScreen.main.bounds.width, height: 250))

backgroundColor = .clear

DispatchQueue.main.async {

self.addSubview(self.videoView)

self.bringSubviewToFront(self.videoView)

track?.add(self.videoView)

}

}

required init?(coder: NSCoder) {

fatalError("init(coder:) has not been implemented")

}

}

/// ParticipantView for showing and hiding VideoView

struct ParticipantView: UIViewRepresentable {

var track: RTCVideoTrack?

func makeUIView(context: Context) -> VideoView {

let view = VideoView(track: track)

view.frame = CGRect(x: 0, y: 0, width: 250, height: 250)

return view

}

func updateUIView(_ uiView: VideoView, context: Context) {

// Attach the renderer when a track is available; nothing to do when track is nil

track?.add(uiView.videoView)

}

}
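The decision made when the view appears (join an existing stream when an ID was supplied, otherwise create a new one) can be sketched without any UI. `EntryAction` and `entryAction(for:)` are hypothetical names used only for this illustration.

```swift
// Hypothetical description of what the view should do when it appears.
enum EntryAction: Equatable {
    case joinExisting(streamId: String)
    case createNew
}

// A non-empty ID means "join that stream"; nil or empty means "create a new one".
func entryAction(for streamId: String?) -> EntryAction {
    if let id = streamId, !id.isEmpty {
        return .joinExisting(streamId: id)
    }
    return .createNew
}

print(entryAction(for: "abcd-efgh"))
print(entryAction(for: nil))
```

Separating the decision from the SDK calls makes the branch easy to check on its own before wiring it to joinStream and joinRoom.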

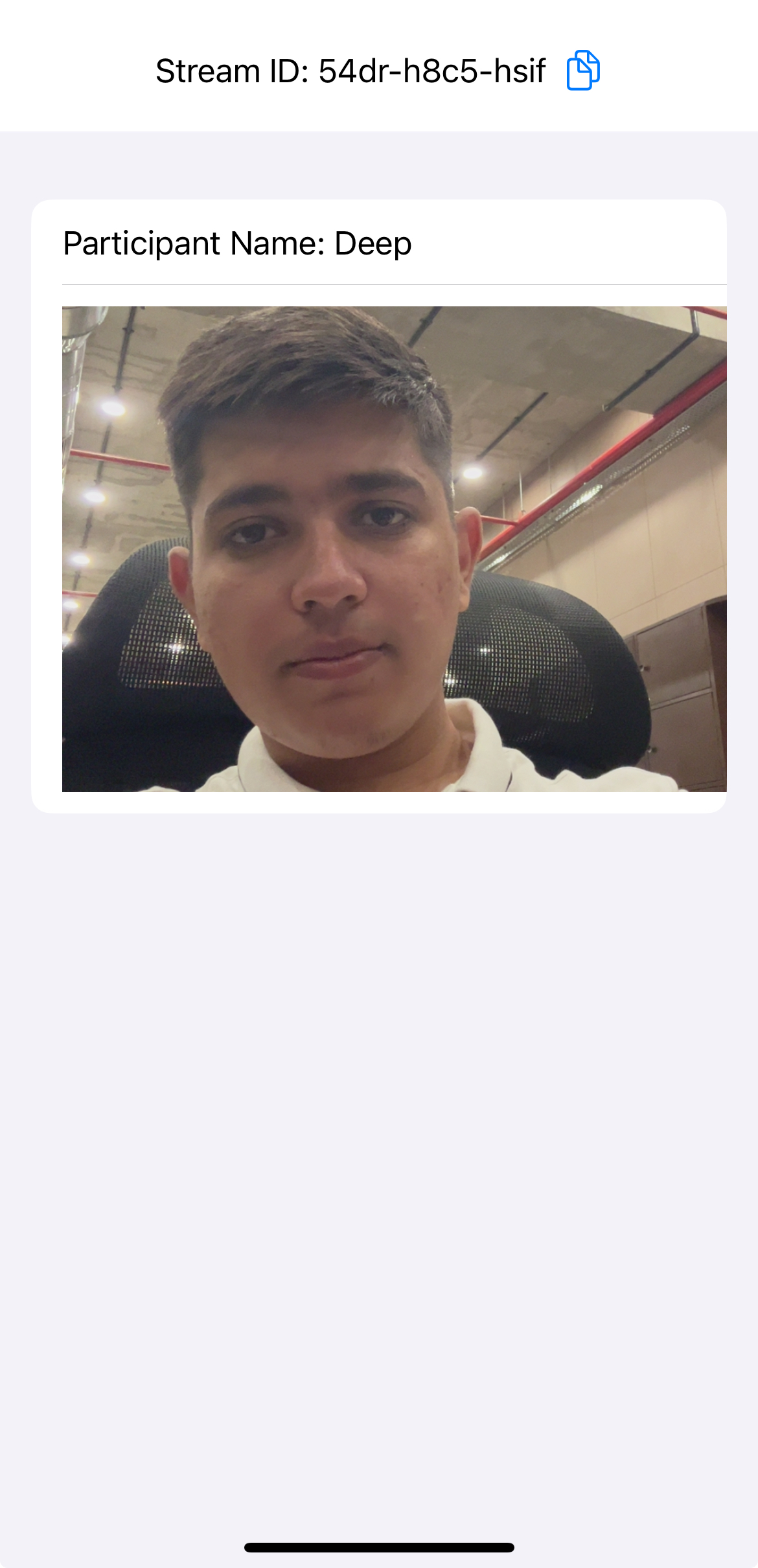

Output

Step 7: Implementing Media Toggles and Mode Switch

Media Control Buttons: Include buttons to toggle the microphone and webcam, allowing users to mute/unmute their microphone and enable/disable their camera or leave the session entirely.

Mode Switching: Users can switch between "Host" and "Audience" modes.

- Swift

struct LiveStreamView: View {

var body: some View {

VStack {

if liveStreamViewController.participants.isEmpty {

Text("Stream Initializing")

} else {

VStack {

// ... other code for displaying participants

// Media Control Buttons

if !isAudienceMode {

HStack(spacing: 15) {

// Button to toggle microphone mute/unmute

Button {

isUnMute.toggle()

if isUnMute {

liveStreamViewController.meeting?.unmuteMic()

} else {

liveStreamViewController.meeting?.muteMic()

}

} label: {

ModeButton(

text: isUnMute ? "Mute Mic" : "Unmute Mic",

color: .blue

)

}

// Button to enable/disable webcam

Button {

camEnabled.toggle()

if camEnabled {

liveStreamViewController.meeting?.enableWebcam()

} else {

liveStreamViewController.meeting?.disableWebcam()

}

} label: {

ModeButton(

text: camEnabled ? "Disable Webcam" : "Enable Webcam",

color: .blue

)

}

}

}

// Mode Control Buttons for switching modes

HStack(spacing: 15) {

// Button to leave the call

Button {

liveStreamViewController.meeting?.leave()

presentationMode.wrappedValue.dismiss()

} label: {

ModeButton(text: "Leave Call", color: .red)

}

// Button to switch between audience and host modes

Button {

let newMode: Mode = isAudienceMode ? .SEND_AND_RECV : .RECV_ONLY

liveStreamViewController.meeting?.changeMode(newMode)

currentMode = newMode

} label: {

ModeButton(

text: isAudienceMode ? "Switch to Host" : "Switch to Audience",

color: .indigo

)

}

}

}

}

}

.onAppear {

// code to handle view appearing

}

}

}

// Helper View for consistent button styling

struct ModeButton: View {

let text: String

let color: Color

var body: some View {

Text(text)

.foregroundStyle(Color.white)

.font(.caption)

.padding()

.background(

RoundedRectangle(cornerRadius: 25)

.fill(color)

)

}

}
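The state behind these buttons can be modelled separately from the views. The sketch below is an assumption-laden illustration: `SketchMode` and `StreamControls` are hypothetical types, the property names follow the view's state variables, and no SDK call is made.

```swift
// Hypothetical stand-in for the SDK's mode values.
enum SketchMode {
    case sendAndRecv
    case recvOnly
}

// Hypothetical model of the control bar state.
struct StreamControls {
    var mode: SketchMode
    var isUnMute = true
    var camEnabled = true

    // Mic and webcam buttons are shown to hosts only.
    var showsMediaButtons: Bool { mode == .sendAndRecv }

    var micLabel: String { isUnMute ? "Mute Mic" : "Unmute Mic" }
    var camLabel: String { camEnabled ? "Disable Webcam" : "Enable Webcam" }
    var modeLabel: String { mode == .recvOnly ? "Switch to Host" : "Switch to Audience" }

    // Flips between host and audience, like the mode switch button.
    mutating func toggleMode() {
        mode = (mode == .recvOnly) ? .sendAndRecv : .recvOnly
    }
}

var controls = StreamControls(mode: .sendAndRecv)
controls.isUnMute.toggle()
print(controls.micLabel)            // Unmute Mic
controls.toggleMode()
print(controls.showsMediaButtons)   // false
print(controls.modeLabel)           // Switch to Host
```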

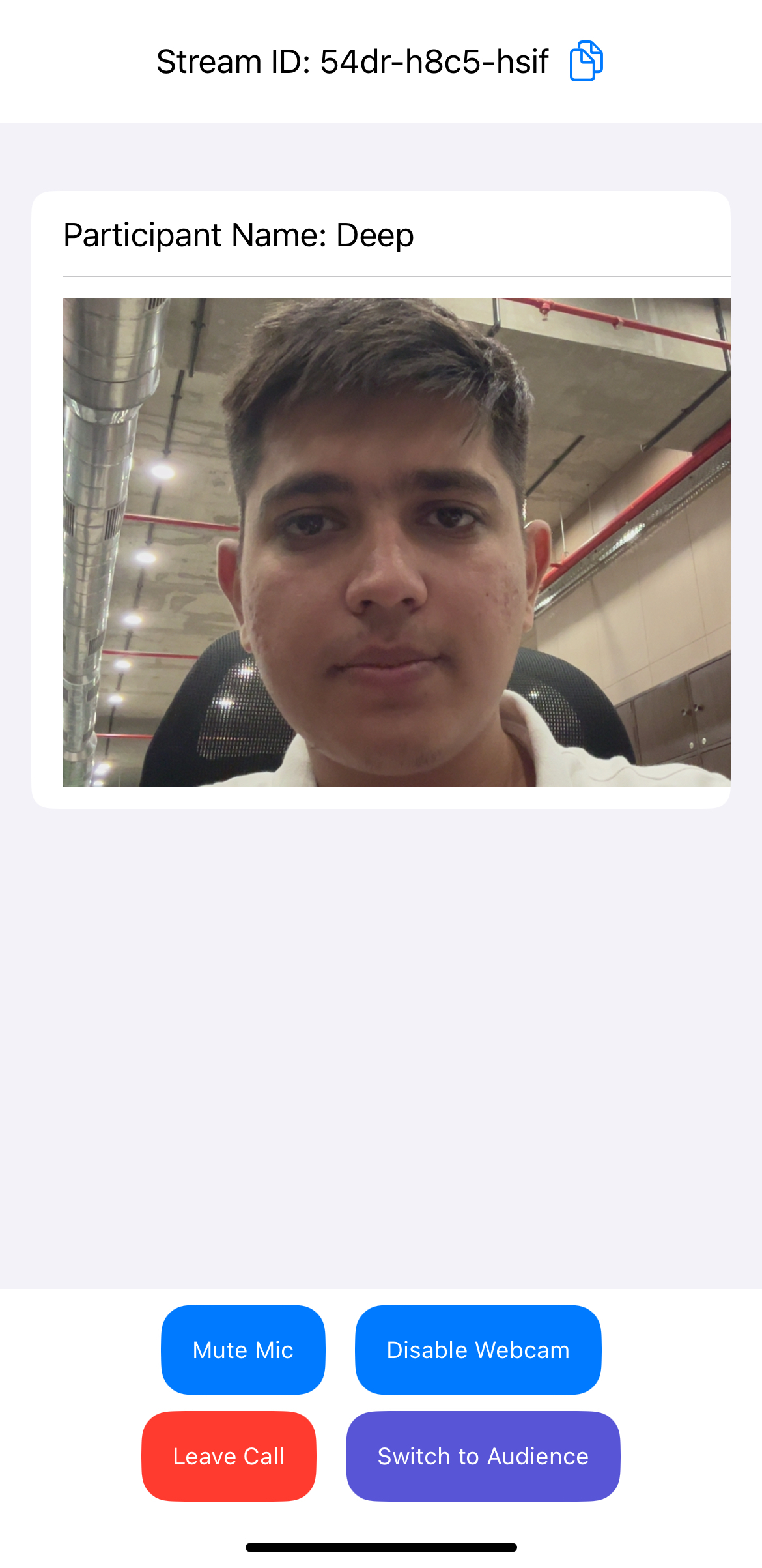

Output

Step 8: Extending Meeting Event Listeners

In this step, we extend the MeetingEventListener implementation in LiveStreamViewController with more callbacks for richer stream handling.

These include onParticipantJoined and onParticipantLeft to handle participant updates, onMeetingStateChanged to manage meeting state transitions, and onParticipantModeChanged to track participant modes, allowing us to utilize them as needed.

- Swift

class LiveStreamViewController: ObservableObject {
    //...
    private var needsRefresh = false
    //...
}

extension LiveStreamViewController: MeetingEventListener {
    //...

    func onParticipantJoined(_ participant: Participant) {
        participants.append(participant)
        // add listener
        participant.addEventListener(self)
        participant.setQuality(.high)
    }

    func onParticipantLeft(_ participant: Participant) {
        participants = participants.filter({ $0.id != participant.id })
    }

    func onMeetingStateChanged(meetingState: MeetingState) {
        switch meetingState {
        case .CLOSED:
            participants.removeAll()
        default:
            // No action needed for other states in this quick start
            break
        }
    }

    func onParticipantModeChanged(participantId: String, mode: Mode) {
        DispatchQueue.main.async { [weak self] in
            if var currentParticipants = self?.participants {
                // Find and update the participant whose mode changed
                if let index = currentParticipants.firstIndex(where: { $0.id == participantId }) {
                    currentParticipants[index].mode = mode
                    // Update the published array to trigger refresh
                    self?.participants = currentParticipants
                }
            }
        }
    }

    //...
}
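The bookkeeping these callbacks perform on the participant list can be sketched with plain value types. `SketchMode`, `SketchParticipant`, and `Roster` are hypothetical names for this illustration and are not SDK types; the `onScreen` filter reflects the idea that only non-audience participants get a video tile.

```swift
// Hypothetical stand-ins for the SDK's mode and participant types.
enum SketchMode {
    case sendAndRecv
    case recvOnly
}

struct SketchParticipant: Equatable {
    let id: String
    var mode: SketchMode
}

// Hypothetical participant list mirroring the listener callbacks.
struct Roster {
    private(set) var participants: [SketchParticipant] = []

    // onParticipantJoined: append the newcomer.
    mutating func joined(_ participant: SketchParticipant) {
        participants.append(participant)
    }

    // onParticipantLeft: drop the participant with the matching ID.
    mutating func left(id: String) {
        participants.removeAll { $0.id == id }
    }

    // onParticipantModeChanged: update the mode in place if the ID is known.
    mutating func modeChanged(id: String, to mode: SketchMode) {
        guard let index = participants.firstIndex(where: { $0.id == id }) else { return }
        participants[index].mode = mode
    }

    // onMeetingStateChanged with a closed state: clear everything.
    mutating func closed() {
        participants.removeAll()
    }

    // Only hosts and co-hosts are rendered as tiles.
    var onScreen: [SketchParticipant] {
        participants.filter { $0.mode != .recvOnly }
    }
}

var roster = Roster()
roster.joined(SketchParticipant(id: "host-1", mode: .sendAndRecv))
roster.joined(SketchParticipant(id: "viewer-1", mode: .recvOnly))
print(roster.onScreen.map { $0.id })   // ["host-1"]
```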

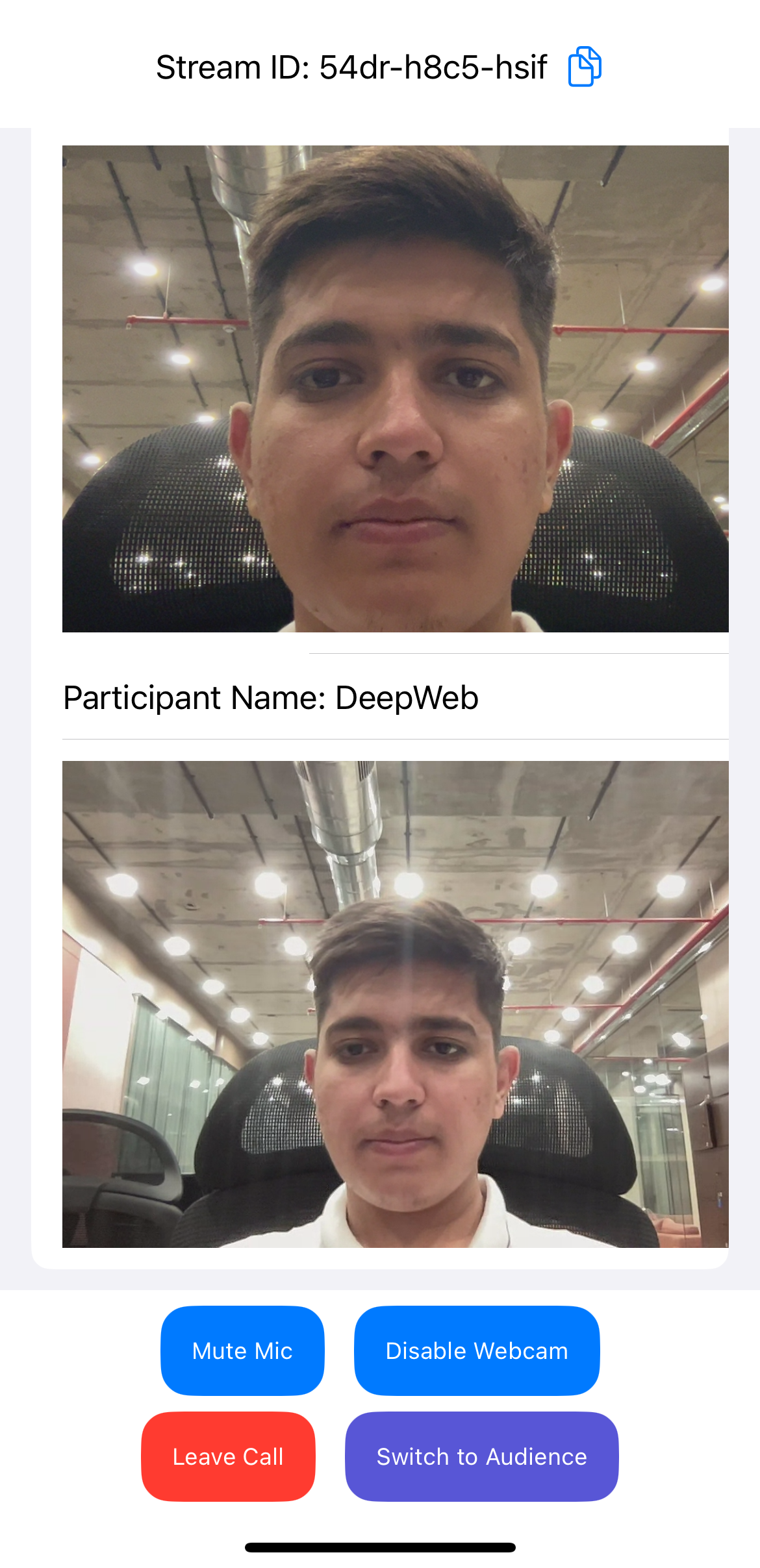

Output

Final Output

We are done implementing Interactive Live Streaming in an iOS application using Video SDK. To explore more features, go through the Basic and Advanced features.

Stuck anywhere? Check out this example code on GitHub

Got a question? Ask us on Discord