Transform Video - Python

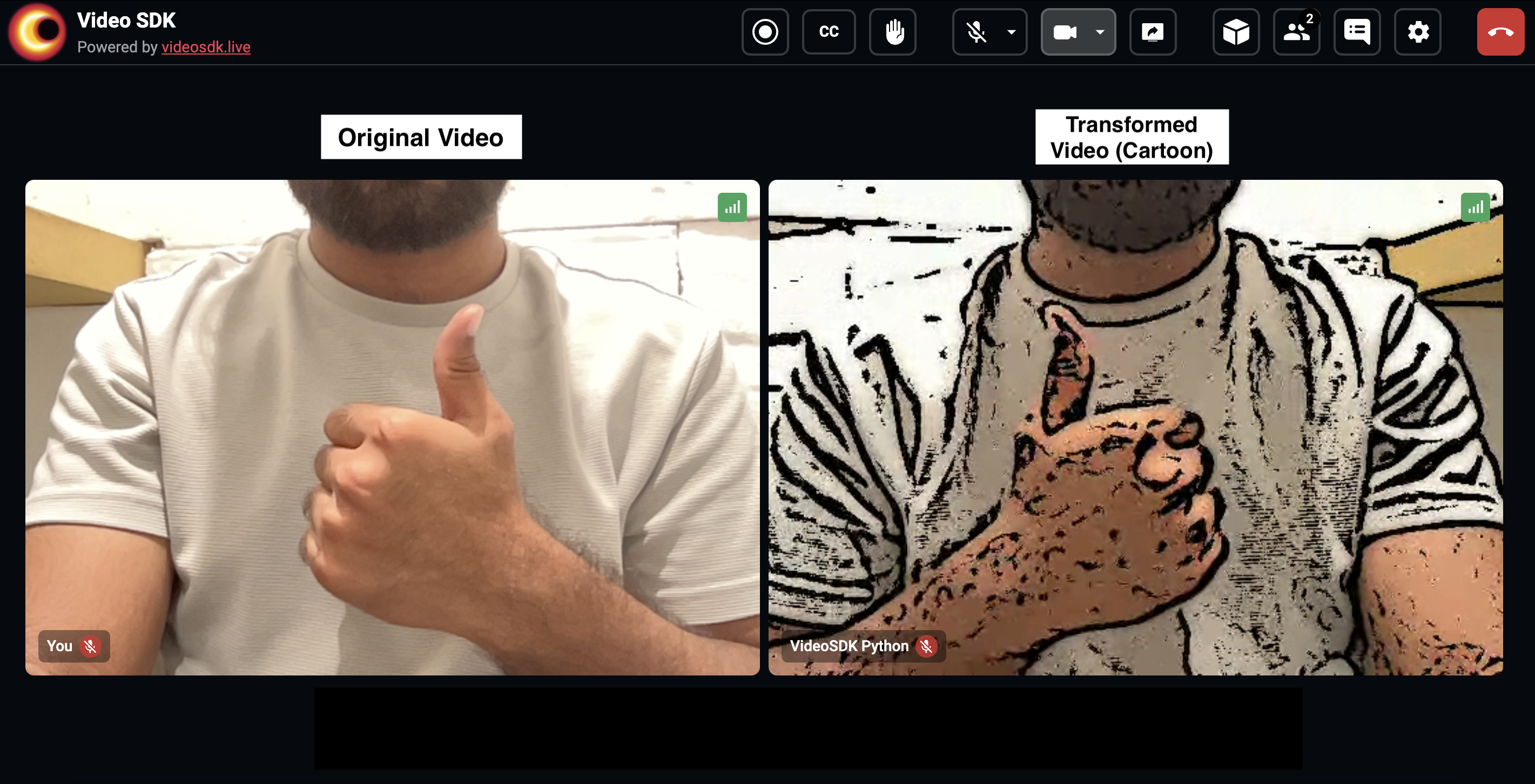

This guide will help you understand how to transform video frames in real-time using the VideoSDK. In this example, we'll transform video frames into a cartoon-like appearance.

Prerequisites

- Install necessary libraries:

pip install videosdk python-dotenv opencv-python av - Create a

.envfile and add your VideoSDK token, meeting ID, and name:VIDEOSDK_TOKEN=your_token

MEETING_ID=your_meeting_id

NAME=your_name

Code Breakdown

Imports and Constants

We start by importing necessary libraries and loading environment variables in transform_video.py file:

import asyncio

import os

from videosdk import MeetingConfig, VideoSDK, Participant, Stream, MeetingEventHandler, ParticipantEventHandler, CustomVideoTrack, Meeting

import mediapipe as mp

import cv2

from av import VideoFrame

from dotenv import load_dotenv

load_dotenv()

VIDEOSDK_TOKEN = os.getenv("VIDEOSDK_TOKEN")

MEETING_ID = os.getenv("MEETING_ID")

NAME = os.getenv("NAME")

loop = asyncio.get_event_loop()

meeting: Meeting = None

Transform Video Processor

This processor performs frame detection on each video frame and draws the cartoon-like anime and return the frame:

class TransformVideoProcessor():

def __init__(self) -> None:

print("Processor initialized")

def process(self, frame: VideoFrame) -> VideoFrame:

img = frame.to_ndarray(format="bgr24")

# prepare color

img_color = cv2.pyrDown(cv2.pyrDown(img))

for _ in range(6):

img_color = cv2.bilateralFilter(img_color, 9, 9, 7)

img_color = cv2.pyrUp(cv2.pyrUp(img_color))

# prepare edges

img_edges = cv2.cvtColor(img, cv2.COLOR_RGB2GRAY)

img_edges = cv2.adaptiveThreshold(

cv2.medianBlur(img_edges, 7),

255,

cv2.ADAPTIVE_THRESH_MEAN_C,

cv2.THRESH_BINARY,

9,

2,

)

img_edges = cv2.cvtColor(img_edges, cv2.COLOR_GRAY2RGB)

# combine color and edges

img = cv2.bitwise_and(img_color, img_edges)

# rebuild a VideoFrame, preserving timing information

new_frame = VideoFrame.from_ndarray(img, format="bgr24")

new_frame.pts = frame.pts

new_frame.time_base = frame.time_base

return new_frame

CustomVideoTrack

Define a custom video track that will run the above processor when new frame received.

class ProcessedVideoTrack(CustomVideoTrack):

"""

A video stream track that transforms frames from an another track.

"""

kind = "video"

def __init__(self, track):

super().__init__() # don't forget this!

self.track = track

self.processor = TransformVideoProcessor()

async def recv(self):

frame = await self.track.recv()

new_frame = self.processor.process(frame)

return new_frame

Process on stream available

This function applies the ProcessedVideoTrack to a available video track:

def process_video(track: CustomVideoTrack):

global meeting

meeting.add_custom_video_track(

track=ProcessedVideoTrack(track=track)

)

Event Handlers

Define event handlers to handle meeting and participant events:

class MyMeetingEventHandler(MeetingEventHandler):

def __init__(self):

super().__init__()

def on_meeting_left(self, data):

print("on_meeting_left")

def on_participant_joined(self, participant: Participant):

participant.add_event_listener(

MyParticipantEventHandler(participant_id=participant.id)

)

def on_participant_left(self, participant: Participant):

print("on_participant_left")

class MyParticipantEventHandler(ParticipantEventHandler):

def __init__(self, participant_id: str):

super().__init__()

self.participant_id = participant_id

def on_stream_enabled(self, stream: Stream):

print("on_stream_enabled: " + stream.kind)

if stream.kind == "video":

process_video(track=stream.track)

def on_stream_disabled(self, stream: Stream):

print("on_stream_disabled")

Main Function

Initialize the meeting and start the event loop:

def main():

global meeting

# Example usage:

meeting_config = MeetingConfig(

meeting_id=MEETING_ID,

name=NAME,

mic_enabled=False,

webcam_enabled=False,

token=VIDEOSDK_TOKEN,

)

meeting = VideoSDK.init_meeting(**meeting_config)

print("adding event listener...")

meeting.add_event_listener(MyMeetingEventHandler())

print("joining into meeting...")

meeting.join()

if __name__ == "__main__":

main()

loop.run_forever()

Running the Code

To run the code, simply execute the script:

python transform_video.py

This script will join the meeting specified by MEETING_ID with the provided VIDEOSDK_TOKEN and NAME, and transform video frames in real-time using the cartoon filter.

Feel free to modify the transformation logic inside the process method of the VideoTransformTrack class to apply different kinds of video transformations.

Output

Check out this example code on GitHub, to Explore More Transformation like cartoon, edges, blur, face-blur, rotate

API Reference

The API references for all the methods and events utilized in this guide are provided below.

Got a Question? Ask us on discord