Quick Start for HTTP Live Streaming (HLS) in JavaScript

VideoSDK lets you integrate HTTP Live Streaming (HLS) into your JavaScript application within minutes.

In this quickstart, you'll explore this feature of VideoSDK. Follow the step-by-step guide to integrate it within your application.

For low-latency interactive live streaming (under 100ms), follow this documentation.

Prerequisites

Before proceeding, ensure that your development environment meets the following requirements:

- Video SDK Developer Account (if you don't have one, sign up through the Video SDK Dashboard)

- Node.js and npm installed on your device.

You need a VideoSDK account to generate a token. Visit the VideoSDK dashboard to generate one.

Getting Started with the Code!

Follow the steps to create the environment necessary to add video calls into your app. You can also find the code sample for quickstart here.

Install Video SDK

Import VideoSDK using the <script> tag, or install it with npm or yarn. Make sure you are in your app directory before you run the install command.

Using the <script> tag:

<html>

<head>

<!--.....-->

</head>

<body>

<!--.....-->

<script src="https://sdk.videosdk.live/js-sdk/0.1.6/videosdk.js"></script>

</body>

</html>

Using npm:

npm install @videosdk.live/js-sdk

Using yarn:

yarn add @videosdk.live/js-sdk

Structure of the project

Your project structure should look like this.

root

├── index.html

├── config.js

├── index.js

You will be working on the following files:

- index.html: Responsible for creating a basic UI.

- config.js: Responsible for storing the token.

- index.js: Responsible for rendering the meeting view and the join meeting functionality.

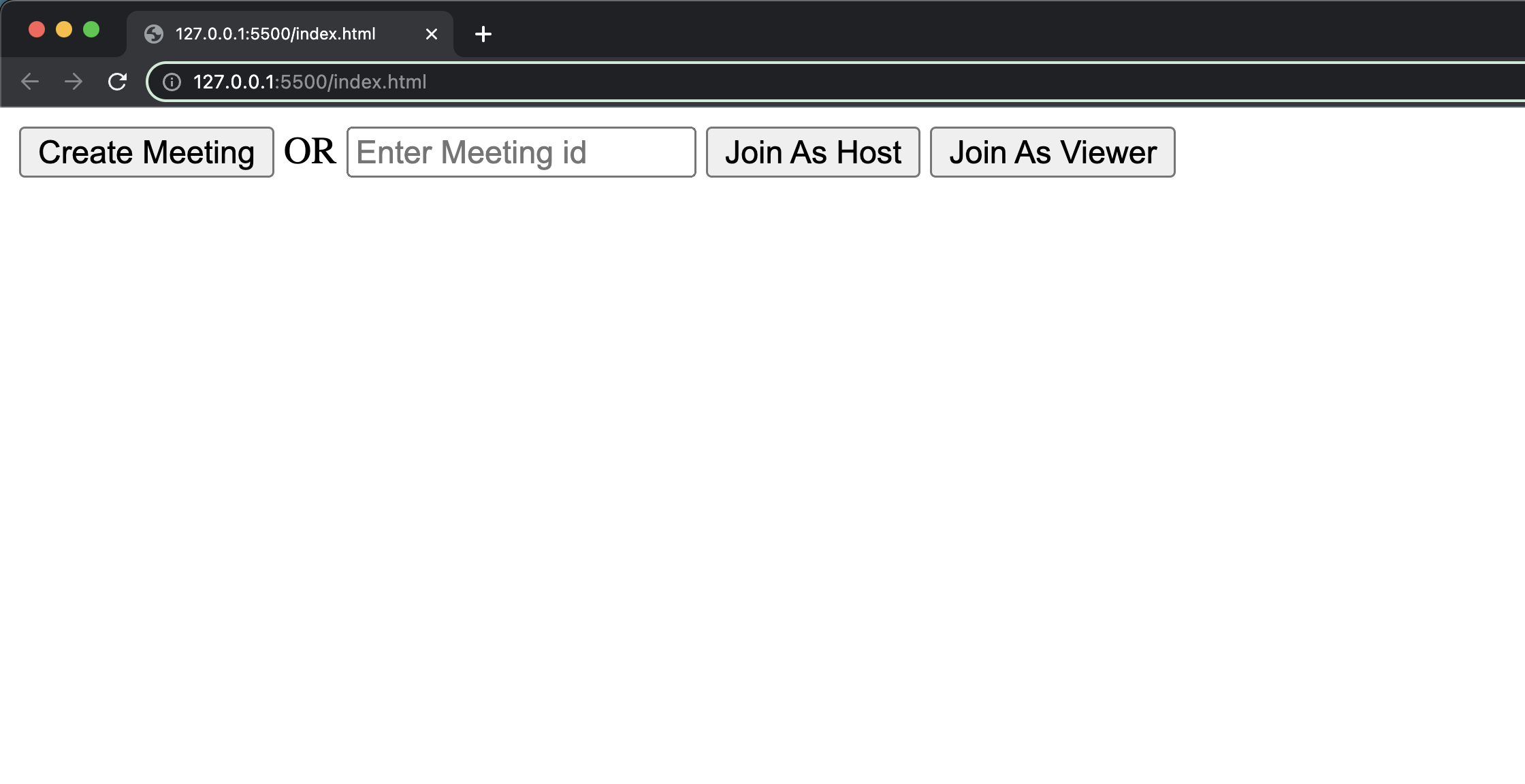

Step 1: Design the user interface (UI)

Create an HTML file containing two screens: join-screen and grid-screen.

<!DOCTYPE html>

<html>

<head> </head>

<body>

<div id="join-screen">

<!-- Create new Meeting Button -->

<button id="createMeetingBtn">Create Meeting</button>

OR

<!-- Join existing Meeting -->

<input type="text" id="meetingIdTxt" placeholder="Enter Meeting id" />

<button id="joinHostBtn">Join As Host</button>

<button id="joinViewerBtn">Join As Viewer</button>

</div>

<!-- for Managing meeting status -->

<div id="textDiv"></div>

<div id="grid-screen" style="display: none">

<!-- To Display MeetingId -->

<h3 id="meetingIdHeading"></h3>

<h3 id="hlsStatusHeading"></h3>

<div id="speakerView" style="display: none">

<!-- Controllers -->

<button id="leaveBtn">Leave</button>

<button id="toggleMicBtn">Toggle Mic</button>

<button id="toggleWebCamBtn">Toggle WebCam</button>

<button id="startHlsBtn">Start HLS</button>

<button id="stopHlsBtn">Stop HLS</button>

</div>

<!-- render Video -->

<div id="videoContainer"></div>

</div>

<script src="https://sdk.videosdk.live/js-sdk/0.1.6/videosdk.js"></script>

<script src="config.js"></script>

<script src="index.js"></script>

<!-- hls lib script -->

<script src="https://cdn.jsdelivr.net/npm/hls.js"></script>

</body>

</html>

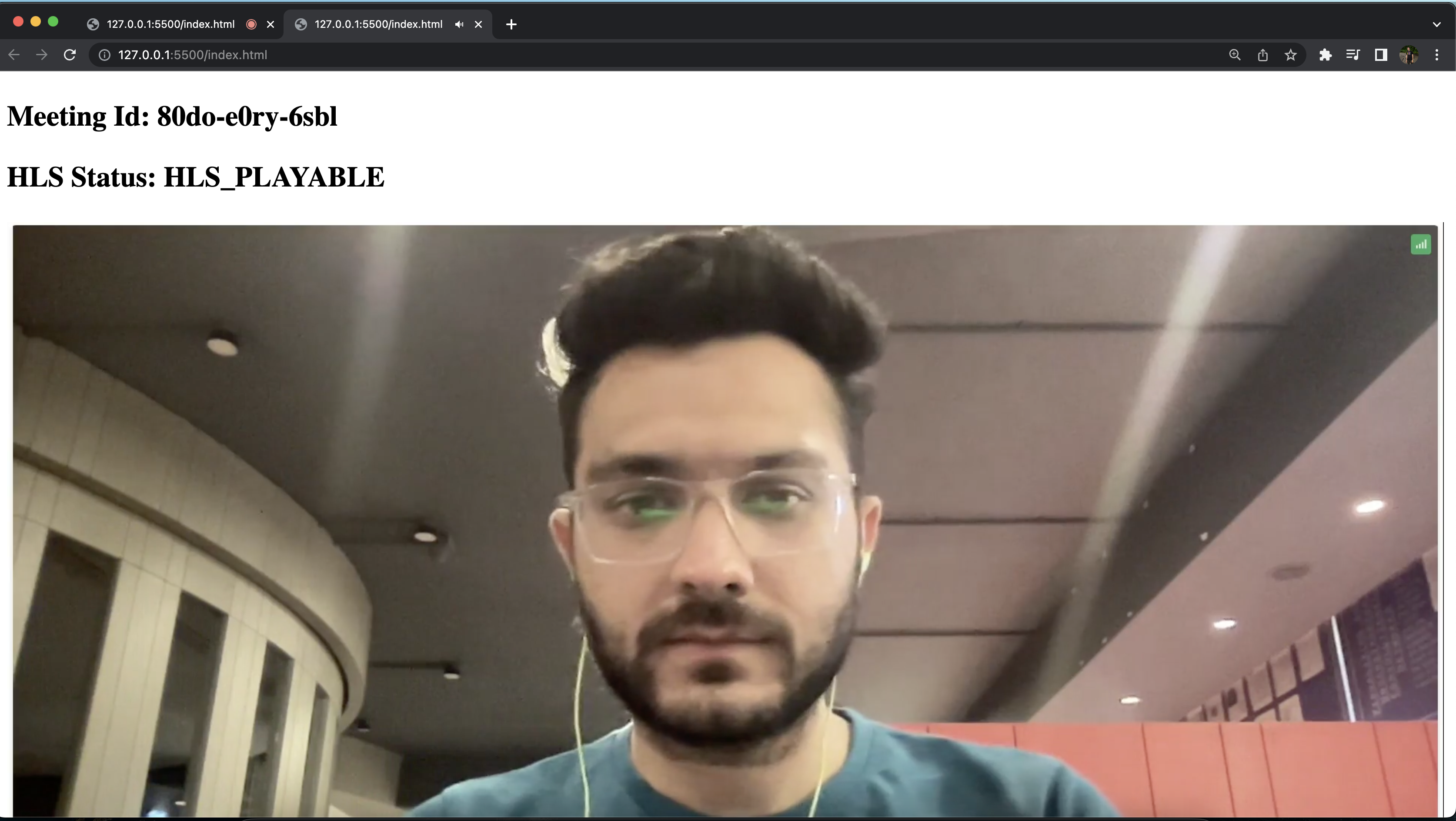

Output

Step 2: Implement Join Screen

Configure the token in the config.js file. You can obtain the token from the VideoSDK Dashboard.

// Auth token will be used to generate a meeting and connect to it

const TOKEN = "Your_Token_Here";

Next, retrieve all the elements from the DOM and declare the following variables in the index.js file. Then, add an event listener to the join and create meeting buttons.

The join screen lets the user either create a new meeting or join an existing one as a host or a viewer.

It has three buttons:

1. Join as Host: When this button is clicked, the person joins the meeting with the entered meetingId as a host (SEND_AND_RECV mode).

2. Join as Viewer: When this button is clicked, the person joins the meeting with the entered meetingId as a viewer (SIGNALLING_ONLY mode).

3. Create Meeting: When this button is clicked, a new meeting is created and the person joins it as a host (SEND_AND_RECV mode).
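The mapping from the three buttons to the two participant modes can be sketched as a small pure function. This is only an illustration: `MODES` is a local stand-in for `VideoSDK.Constants.modes` (the string values are assumed), and `resolveJoinRequest` is a hypothetical helper, not part of the SDK.

```javascript
// Local stand-in for VideoSDK.Constants.modes (string values assumed for illustration).
const MODES = {
  SEND_AND_RECV: "SEND_AND_RECV",
  SIGNALLING_ONLY: "SIGNALLING_ONLY",
};

// Hypothetical helper: decide the mode for a button and validate the meeting ID input.
function resolveJoinRequest(action, meetingIdInput = "") {
  const id = meetingIdInput.trim();
  switch (action) {
    case "create":
      // A new room is created first, so no ID is required from the user.
      return { mode: MODES.SEND_AND_RECV, meetingId: null };
    case "joinHost":
    case "joinViewer":
      if (!id) throw new Error("Enter a meeting ID before joining");
      return {
        mode: action === "joinHost" ? MODES.SEND_AND_RECV : MODES.SIGNALLING_ONLY,
        meetingId: id,
      };
    default:
      throw new Error(`Unknown action: ${action}`);
  }
}
```

Validating the input up front avoids calling the SDK with an empty meeting ID when someone clicks a join button without typing anything.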

// getting Elements from Dom

const joinHostButton = document.getElementById("joinHostBtn");

const joinViewerButton = document.getElementById("joinViewerBtn");

const leaveButton = document.getElementById("leaveBtn");

const startHlsButton = document.getElementById("startHlsBtn");

const stopHlsButton = document.getElementById("stopHlsBtn");

const toggleMicButton = document.getElementById("toggleMicBtn");

const toggleWebCamButton = document.getElementById("toggleWebCamBtn");

const createButton = document.getElementById("createMeetingBtn");

const videoContainer = document.getElementById("videoContainer");

const textDiv = document.getElementById("textDiv");

const hlsStatusHeading = document.getElementById("hlsStatusHeading");

// declare Variables

let meeting = null;

let meetingId = "";

let isMicOn = false;

let isWebCamOn = false;

const Constants = VideoSDK.Constants;

function initializeMeeting() {}

function createLocalParticipant() {}

function createVideoElement() {}

function createAudioElement() {}

function setTrack() {}

// Join Meeting As Host Button Event Listener

joinHostButton.addEventListener("click", async () => {

document.getElementById("join-screen").style.display = "none";

textDiv.textContent = "Joining the meeting...";

const roomId = document.getElementById("meetingIdTxt").value;

meetingId = roomId;

initializeMeeting(Constants.modes.SEND_AND_RECV);

});

// Join Meeting As Viewer Button Event Listener

joinViewerButton.addEventListener("click", async () => {

document.getElementById("join-screen").style.display = "none";

textDiv.textContent = "Joining the meeting...";

const roomId = document.getElementById("meetingIdTxt").value;

meetingId = roomId;

initializeMeeting(Constants.modes.SIGNALLING_ONLY);

});

// Create Meeting Button Event Listener

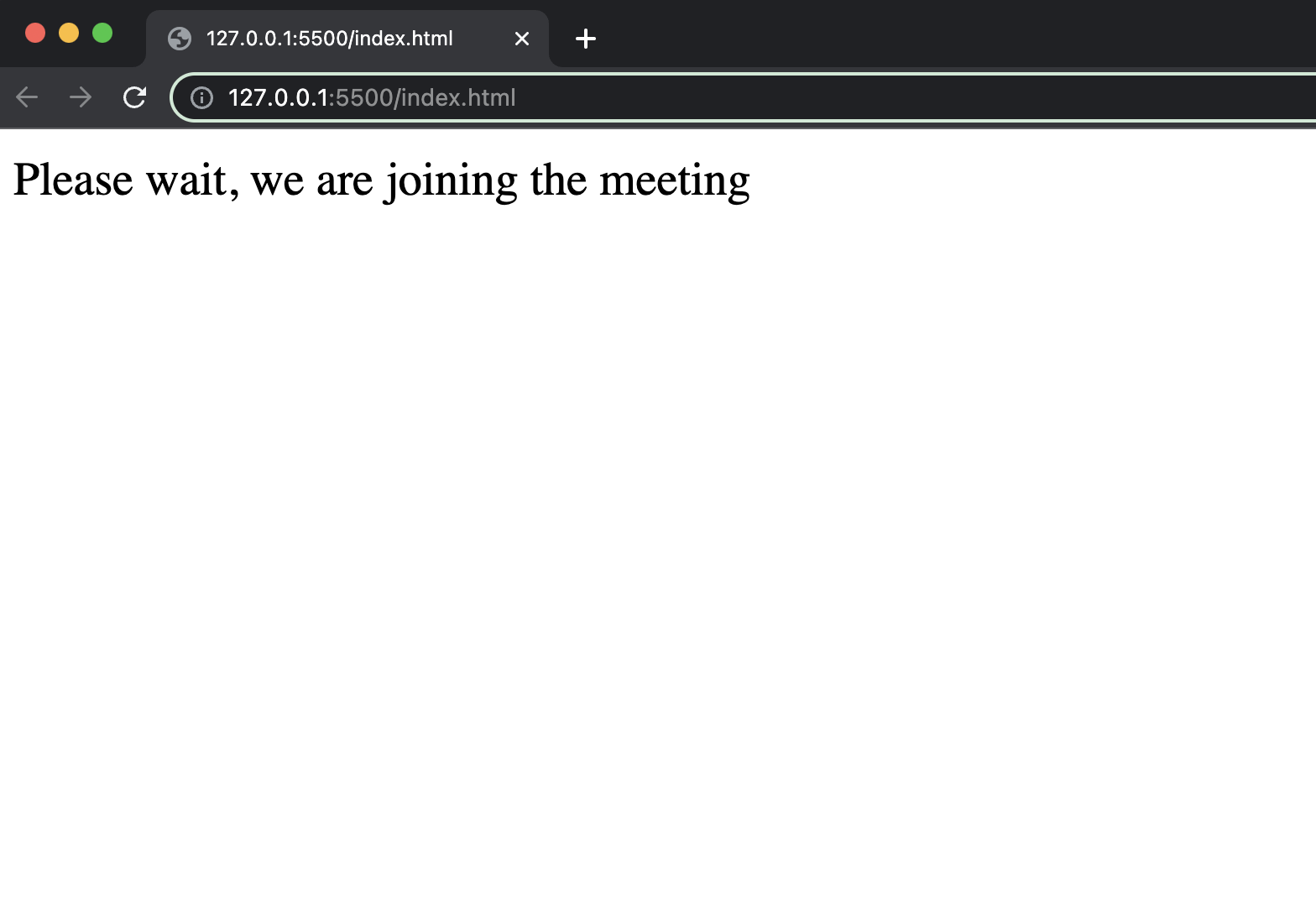

createButton.addEventListener("click", async () => {

document.getElementById("join-screen").style.display = "none";

textDiv.textContent = "Please wait, we are joining the meeting";

const url = `https://api.videosdk.live/v2/rooms`;

const options = {

method: "POST",

headers: { Authorization: TOKEN, "Content-Type": "application/json" },

};

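let roomId;

try {

const response = await fetch(url, options);

({ roomId } = await response.json());

} catch (error) {

// Stop here: without a roomId there is no meeting to join

alert(`Could not create the meeting: ${error}`);

return;

}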

meetingId = roomId;

initializeMeeting(Constants.modes.SEND_AND_RECV);

});
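One way to make the room-creation call easier to test, and to surface HTTP errors, is to wrap it in a helper. The sketch below uses the same endpoint and headers as the listener above. `createRoom` and its `fetchImpl` parameter are hypothetical names introduced here, and the error handling is an assumption rather than documented API behavior.

```javascript
// Hypothetical helper wrapping the POST /v2/rooms call used above.
// `fetchImpl` is injectable so the function can be exercised without a network.
async function createRoom(token, fetchImpl = globalThis.fetch) {
  const response = await fetchImpl("https://api.videosdk.live/v2/rooms", {
    method: "POST",
    headers: { Authorization: token, "Content-Type": "application/json" },
  });
  if (!response.ok) {
    // Assumption: any non-2xx status means no room was created.
    throw new Error(`Room creation failed with HTTP ${response.status}`);
  }
  const { roomId } = await response.json();
  if (!roomId) throw new Error("Response did not contain a roomId");
  return roomId;
}
```

Inside the click listener this could be used as `meetingId = await createRoom(TOKEN);` within a `try`/`catch` that restores the join screen on failure.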

Output

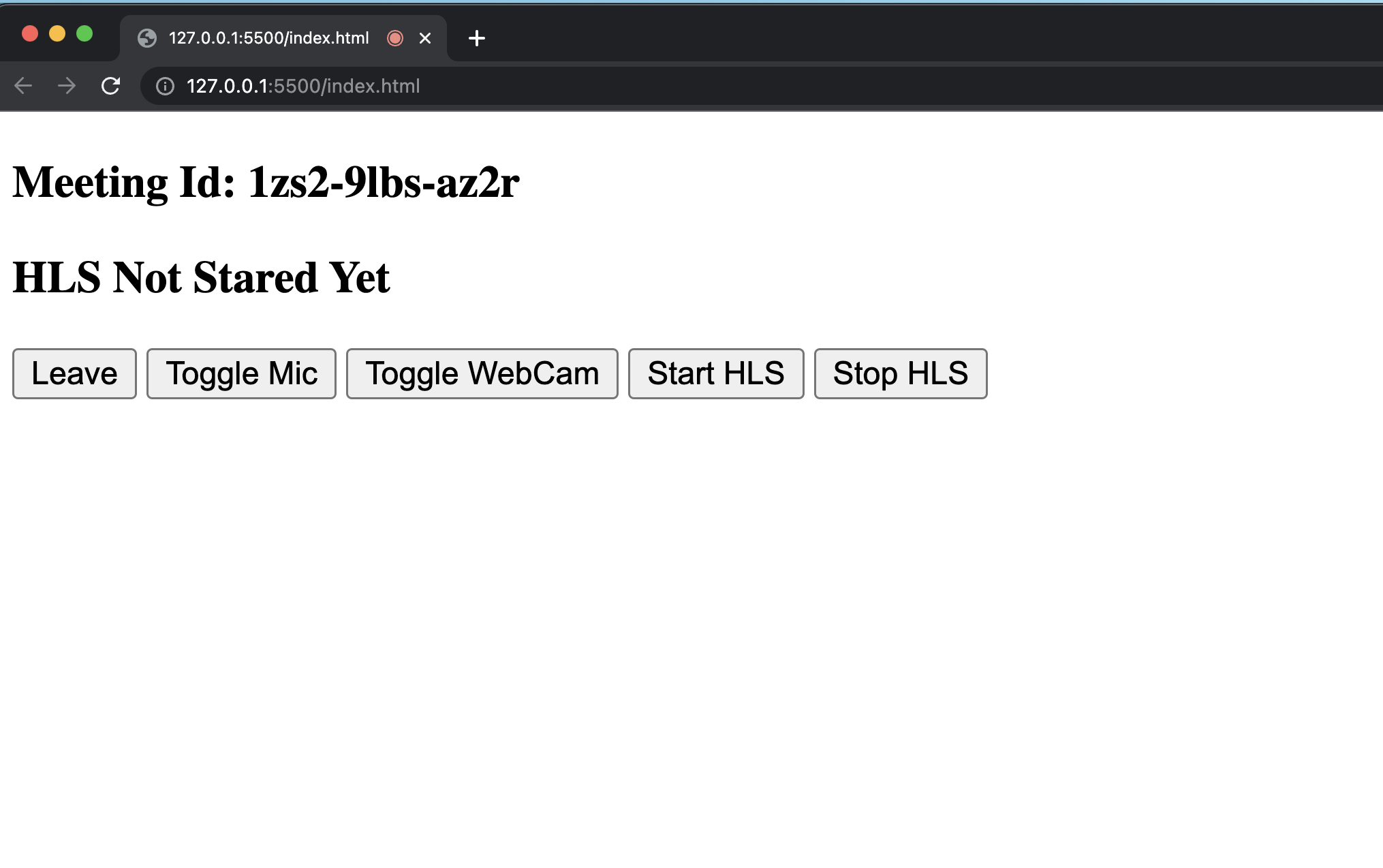

Step 3: Initialize meeting

Initialize the meeting based on the mode passed to the function and only create a local participant stream when the mode is SEND_AND_RECV.

// Initialize meeting

function initializeMeeting(mode) {

window.VideoSDK.config(TOKEN);

meeting = window.VideoSDK.initMeeting({

meetingId: meetingId, // required

name: "Thomas Edison", // required

mode: mode,

});

meeting.join();

meeting.on("meeting-joined", () => {

textDiv.textContent = null;

document.getElementById("grid-screen").style.display = "block";

document.getElementById(

"meetingIdHeading"

).textContent = `Meeting Id: ${meetingId}`;

if (meeting.hlsState === Constants.hlsEvents.HLS_STOPPED) {

hlsStatusHeading.textContent = "HLS has not started yet";

} else {

hlsStatusHeading.textContent = `HLS Status: ${meeting.hlsState}`;

}

if (mode === Constants.modes.SEND_AND_RECV) {

// Pin the local participant if he joins in `SEND_AND_RECV` mode

meeting.localParticipant.pin();

document.getElementById("speakerView").style.display = "block";

}

});

meeting.on("meeting-left", () => {

videoContainer.innerHTML = "";

});

meeting.on("hls-state-changed", (data) => {

//

});

if (mode === Constants.modes.SEND_AND_RECV) {

// creating local participant

createLocalParticipant();

// setting local participant stream

meeting.localParticipant.on("stream-enabled", (stream) => {

setTrack(stream, null, meeting.localParticipant, true);

});

// participant joined

meeting.on("participant-joined", (participant) => {

if (participant.mode === Constants.modes.SEND_AND_RECV) {

participant.pin();

let videoElement = createVideoElement(

participant.id,

participant.displayName

);

let audioElement = createAudioElement(participant.id);

participant.on("stream-enabled", (stream) => {

setTrack(stream, audioElement, participant, false);

});

videoContainer.appendChild(videoElement);

videoContainer.appendChild(audioElement);

}

});

// participants left

meeting.on("participant-left", (participant) => {

// Viewers have no media elements, so guard against missing nodes

document.getElementById(`f-${participant.id}`)?.remove();

document.getElementById(`a-${participant.id}`)?.remove();

});

}

}
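The decisions made inside the meeting-joined handler (which status text to show, and whether to reveal the speaker controls) can be separated from the DOM updates. The following is a minimal sketch under stated assumptions: the two constants are local stand-ins for `Constants.modes.SEND_AND_RECV` and `Constants.hlsEvents.HLS_STOPPED` with assumed string values, and `viewStateOnJoin` is a hypothetical helper.

```javascript
// Stand-ins for VideoSDK.Constants values (string values assumed for illustration).
const SEND_AND_RECV = "SEND_AND_RECV";
const HLS_STOPPED = "HLS_STOPPED";

// Hypothetical helper: what the UI should show right after "meeting-joined".
function viewStateOnJoin(mode, hlsState) {
  return {
    statusText:
      hlsState === HLS_STOPPED
        ? "HLS has not started yet"
        : `HLS Status: ${hlsState}`,
    // Only speakers get the control bar and a pinned local tile.
    showSpeakerControls: mode === SEND_AND_RECV,
  };
}
```

The handler would then only copy `statusText` into the heading and toggle the speaker view, which keeps the branching logic checkable without a browser.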


Step 4: Speaker Controls

The next step is to create the SpeakerView and Controls components, which manage actions such as leaving the meeting, muting and unmuting the mic, toggling the webcam, and starting or stopping HLS.

Retrieve all participants from the meeting object and filter them by mode, so that only speakers (participants in SEND_AND_RECV mode) are displayed on the screen.
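The filtering step described above might look like the following sketch. It assumes `meeting.participants` is a `Map` of participant ID to participant object and that each participant exposes a `mode` field. `getSpeakers` is a hypothetical helper name, and the default mode string is an assumed value of `Constants.modes.SEND_AND_RECV`.

```javascript
// Hypothetical helper: list the participants that publish media (speakers).
// `participants` is expected to be a Map of id -> participant with a `mode` field.
function getSpeakers(participants, speakerMode = "SEND_AND_RECV") {
  return [...participants.values()].filter((p) => p.mode === speakerMode);
}

// Example with plain objects standing in for SDK participants.
const demoParticipants = new Map([
  ["p1", { id: "p1", displayName: "Host", mode: "SEND_AND_RECV" }],
  ["p2", { id: "p2", displayName: "Viewer", mode: "SIGNALLING_ONLY" }],
]);
const demoSpeakers = getSpeakers(demoParticipants);
```

In the app this would be called as `getSpeakers(meeting.participants, Constants.modes.SEND_AND_RECV)` before rendering tiles.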

// leave Meeting Button Event Listener

leaveButton.addEventListener("click", async () => {

meeting?.leave();

document.getElementById("grid-screen").style.display = "none";

document.getElementById("join-screen").style.display = "block";

});

// Toggle Mic Button Event Listener

toggleMicButton.addEventListener("click", async () => {

if (isMicOn) {

// Disable Mic in Meeting

meeting?.muteMic();

} else {

// Enable Mic in Meeting

meeting?.unmuteMic();

}

isMicOn = !isMicOn;

});

// Toggle Web Cam Button Event Listener

toggleWebCamButton.addEventListener("click", async () => {

if (isWebCamOn) {

// Disable Webcam in Meeting

meeting?.disableWebcam();

let vElement = document.getElementById(`f-${meeting.localParticipant.id}`);

vElement.style.display = "none";

} else {

// Enable Webcam in Meeting

meeting?.enableWebcam();

let vElement = document.getElementById(`f-${meeting.localParticipant.id}`);

vElement.style.display = "inline";

}

isWebCamOn = !isWebCamOn;

});

// Start Hls Button Event Listener

startHlsButton.addEventListener("click", async () => {

meeting?.startHls({

layout: {

type: "SPOTLIGHT",

priority: "PIN",

gridSize: "20",

},

theme: "LIGHT",

mode: "video-and-audio",

quality: "high",

orientation: "landscape",

});

});

// Stop Hls Button Event Listener

stopHlsButton.addEventListener("click", async () => {

meeting?.stopHls();

});

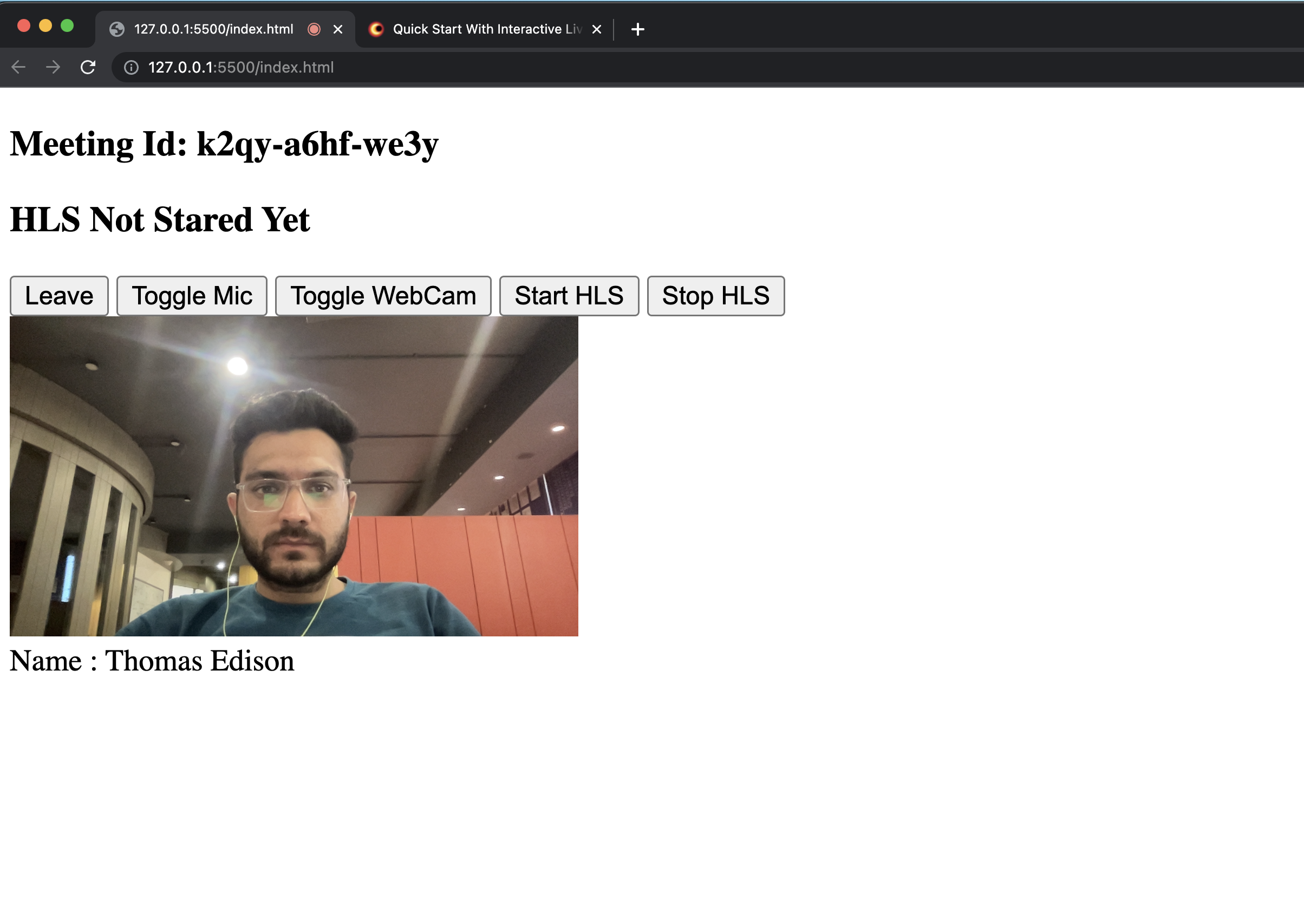

Step 5: Speaker Media Elements

In this step, create functions that generate the audio and video elements used to display local and remote speakers. Then set the corresponding media track, depending on whether the stream is video or audio.

// creating video element

function createVideoElement(pId, name) {

let videoFrame = document.createElement("div");

videoFrame.setAttribute("id", `f-${pId}`);

//create video

let videoElement = document.createElement("video");

videoElement.classList.add("video-frame");

videoElement.setAttribute("id", `v-${pId}`);

videoElement.setAttribute("playsinline", true);

videoElement.setAttribute("width", "300");

videoFrame.appendChild(videoElement);

let displayName = document.createElement("div");

displayName.textContent = `Name : ${name}`;

videoFrame.appendChild(displayName);

return videoFrame;

}

// creating audio element

function createAudioElement(pId) {

let audioElement = document.createElement("audio");

audioElement.setAttribute("autoPlay", "false");

audioElement.setAttribute("playsInline", "true");

audioElement.setAttribute("controls", "false");

audioElement.setAttribute("id", `a-${pId}`);

audioElement.style.display = "none";

return audioElement;

}

// creating local participant

function createLocalParticipant() {

let localParticipant = createVideoElement(

meeting.localParticipant.id,

meeting.localParticipant.displayName

);

videoContainer.appendChild(localParticipant);

}

// setting media track

function setTrack(stream, audioElement, participant, isLocal) {

if (stream.kind == "video") {

isWebCamOn = true;

const mediaStream = new MediaStream();

mediaStream.addTrack(stream.track);

let videoElm = document.getElementById(`v-${participant.id}`);

videoElm.srcObject = mediaStream;

videoElm

.play()

.catch((error) =>

console.error("videoElm.play() failed", error)

);

}

if (stream.kind == "audio") {

if (isLocal) {

isMicOn = true;

} else {

const mediaStream = new MediaStream();

mediaStream.addTrack(stream.track);

audioElement.srcObject = mediaStream;

audioElement

.play()

.catch((error) => console.error("audioElem.play() failed", error));

}

}

}


Step 6: Implement ViewerView

When the host initiates the live streaming, viewers will be able to watch it.

To implement the player view, use hls.js to play the HLS stream. The hls.js script has already been added to the index.html file.

In the hls-state-changed event handler, when the participant mode is SIGNALLING_ONLY and the HLS status is HLS_PLAYABLE, pass the playbackHlsUrl to hls.js and play it.

Note: downstreamUrl is now deprecated. Use playbackHlsUrl or livestreamUrl in place of downstreamUrl.
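If the same handler has to cope with payloads that may carry any of these fields, the URL selection could be isolated as below. This is a sketch only: `pickHlsUrl` is a hypothetical helper, and the preference order (playbackHlsUrl, then livestreamUrl, then the deprecated downstreamUrl) is an assumption, not something the SDK prescribes.

```javascript
// Hypothetical helper: choose the HLS URL from the `hls-state-changed` payload.
// Assumed preference order: playbackHlsUrl, livestreamUrl, then deprecated downstreamUrl.
function pickHlsUrl(data) {
  return data.playbackHlsUrl ?? data.livestreamUrl ?? data.downstreamUrl ?? null;
}
```

Returning `null` when no field is present lets the caller skip player setup instead of passing `undefined` to hls.js.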

// Initialize meeting

function initializeMeeting(mode) {

// ...

// hls-state-changed event

meeting.on("hls-state-changed", (data) => {

const { status } = data;

hlsStatusHeading.textContent = `HLS Status: ${status}`;

if (mode === Constants.modes.SIGNALLING_ONLY) {

if (status === Constants.hlsEvents.HLS_PLAYABLE) {

const { playbackHlsUrl } = data;

let video = document.createElement("video");

video.setAttribute("width", "100%");

video.setAttribute("muted", "false");

// enableAutoPlay for browser autoplay policy

video.setAttribute("autoplay", "true");

if (Hls.isSupported()) {

var hls = new Hls({

maxLoadingDelay: 1, // max video loading delay used in automatic start level selection

defaultAudioCodec: "mp4a.40.2", // default audio codec

maxBufferLength: 0, // If buffer length is/become less than this value, a new fragment will be loaded

maxMaxBufferLength: 1, // Hls.js will never exceed this value

startLevel: 0, // Start playback at the lowest quality level

startPosition: -1, // -1 uses the default start position: 0 for VoD, the live sync position for live streams

maxBufferHole: 0.001, // 'Maximum' inter-fragment buffer hole tolerance that hls.js can cope with when searching for the next fragment to load.

highBufferWatchdogPeriod: 0, // if media element is expected to play and if currentTime has not moved for more than highBufferWatchdogPeriod and if there are more than maxBufferHole seconds buffered upfront, hls.js will jump buffer gaps, or try to nudge playhead to recover playback.

nudgeOffset: 0.05, // In case playback continues to stall after first playhead nudging, currentTime will be nudged even more following nudgeOffset to try to restore playback. media.currentTime += (nb nudge retry -1)*nudgeOffset

nudgeMaxRetry: 1, // Max nb of nudge retries before hls.js raise a fatal BUFFER_STALLED_ERROR

maxFragLookUpTolerance: 0.1, // This tolerance factor is used during fragment lookup.

liveSyncDurationCount: 1, // if set to 3, playback will start from fragment N-3, N being the last fragment of the live playlist

abrEwmaFastLive: 1, // Fast bitrate Exponential moving average half-life, used to compute average bitrate for Live streams.

abrEwmaSlowLive: 3, // Slow bitrate Exponential moving average half-life, used to compute average bitrate for Live streams.

abrEwmaFastVoD: 1, // Fast bitrate Exponential moving average half-life, used to compute average bitrate for VoD streams

abrEwmaSlowVoD: 3, // Slow bitrate Exponential moving average half-life, used to compute average bitrate for VoD streams

maxStarvationDelay: 1, // ABR algorithm will always try to choose a quality level that should avoid rebuffering

});

hls.loadSource(playbackHlsUrl);

hls.attachMedia(video);

hls.on(Hls.Events.MANIFEST_PARSED, function () {

video.play();

});

} else if (video.canPlayType("application/vnd.apple.mpegurl")) {

video.src = playbackHlsUrl;

video.addEventListener("canplay", function () {

video.play();

});

}

videoContainer.appendChild(video);

}

if (status === Constants.hlsEvents.HLS_STOPPING) {

videoContainer.innerHTML = "";

}

}

});

}
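The branching in the hls-state-changed handler reduces to a small decision table: only viewers react, they start playback on HLS_PLAYABLE, and they clear the player on HLS_STOPPING. A minimal sketch of that table follows. `viewerAction` is a hypothetical helper, and the constants are local stand-ins for `Constants.modes` and `Constants.hlsEvents` with assumed string values.

```javascript
// Local stand-ins for VideoSDK.Constants (string values assumed for illustration).
const VIEWER_MODE = "SIGNALLING_ONLY";
const HLS_PLAYABLE = "HLS_PLAYABLE";
const HLS_STOPPING = "HLS_STOPPING";

// Hypothetical helper: what a client should do for a given mode and HLS status.
// Returns "play" (attach the player), "clear" (remove it), or "none".
function viewerAction(mode, status) {
  if (mode !== VIEWER_MODE) return "none"; // speakers never render the HLS player
  if (status === HLS_PLAYABLE) return "play";
  if (status === HLS_STOPPING) return "clear";
  return "none";
}
```

Keeping the decision separate from the hls.js setup makes it straightforward to check that intermediate states such as starting do not create a second player.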


Final Output

You have completed the implementation of a customised live streaming app in JavaScript using VideoSDK. To explore more features, go through Basic and Advanced features.

You can check out the complete quick start example here.

Got a question? Ask us on Discord.